Stat-Ease Blog


Ask An Expert: Len Rubinstein

Next in our 40th anniversary “Ask an Expert” blog series is Leonard “Len” Rubinstein, a Distinguished Scientist at Merck. He has over 3 decades of experience in the pharmaceutical industry, with a background in immunology. Len has spent the last couple of decades working on bioanalytical development, supporting bioprocess and clinical assay endpoints. He’s also a decades-long proponent of design of experiments (DOE), so we reached out to learn what he has to say!

When did you first learn about DOE? What convinced you to try it?

I first learned about DOE in 1996. I enrolled in a six-day training course to better understand the benefits of this approach in my assay development.

What convinced you to stick with DOE, rather than going back to one-factor-at-a-time (OFAT) designs?

Once I started using the DOE approach, I was able to shorten development time but, more importantly, gained insights into understanding interactions and modeling the results to predict optimal parameters that provided the most robust and least variable bioanalytical methods. Afterward, I could never go back to OFAT!

How do you currently use & promote DOE at your company?

DOE has been used in many areas across the company for years, but it had not been explicitly used for the analytical methods supporting clinical studies. I raised awareness through presentations and some brief training sessions. Once my management adopted it, I started sponsoring the training. Since 2018, I have sponsored four in-person training sessions, each with 20 participants.

Some examples of where we used DOE can be found at the end of this interview.

What’s been your approach for spreading the word about how beneficial DOE is?

Convincing others to use DOE is about allowing them to experience the benefits and see how it’s more productive than using an OFAT approach. They get a better understanding of how far the levels of their factors can vary with little effect on the result and, more importantly, sometimes discard what they thought was an important factor (or factors) in favor of those that truly influence their desired outcome.

Is there anything else you’d like to share to further the cause of DOE?

It would be beneficial if our scientists were exposed to DOE approaches in secondary education, be it a BA/BS, MA/MS, or PhD program. An early introduction helps them develop the foundation, and the desire, to continue using the DOE approach and to hone their skills with this type of statistical design in their method development.

And there you have it! We appreciate Len’s perspective and hope you’re able to follow in his footsteps for experimental success. If you’re a secondary education teacher and want to take Len’s advice about introducing DOE to your students, send us a note: we have “course-in-a-box” options for qualified instructors, and we offer discounts to all academics who want to use Stat-Ease software or learn DOE from us.

Len’s published research:

Whiteman, M.C., Bogardus, L., Giacone, D.G., Rubinstein, L.J., Antonello, J.M., Sun, D., Daijogo, S. and K.B. Gurney. 2018. Virus reduction neutralization test: A single-cell imaging high-throughput virus neutralization assay for Dengue. American Journal of Tropical Medicine and Hygiene. 99(6):1430-1439.

Sun, D., Hsu, A., Bogardus, L., Rubinstein, L.J., Antonello, J.M., Gurney, K.B., Whiteman, M.C. and S. Dellatore. 2021. Development and qualification of a fast, high-throughput and robust imaging-based neutralization assay for respiratory syncytial virus. Journal of Immunological Methods. 494:113054.

Marchese, R.D., Puchalski, D., Miller, P., Antonello, J., Hammond, O., Green, T., Rubinstein, L.J., Caulfield, M.J. and D. Sikkema. 2009. Optimization and validation of a multiplex, electrochemiluminescence-based detection assay for the quantitation of immunoglobulin G serotype-specific anti-pneumococcal antibodies in human serum. Clinical and Vaccine Immunology. 16(3):387-396.

Know the SCOR for a winning strategy of experiments

Observing process improvement teams at Imperial Chemical Industries in the late 1940s, George Box, the prime mover for response surface methods (RSM), realized that as a practical matter, statistical plans for experimentation must be very flexible and allow for a series of iterations. Box and other industrial statisticians continued to hone the strategy of experimentation to the point where it became standard practice for stats-savvy industrial researchers.

Via their Management and Technology Center (sadly, now defunct), Du Pont then trained legions of engineers, scientists, and quality professionals on a “Strategy of Experimentation” called “SCO” for its sequence of screening, characterization and optimization. This now-proven SCO strategy of experimentation, illustrated in the flow chart below, begins with fractional two-level designs to screen for previously unknown factors. During this initial phase, experimenters seek to discover the vital few factors that create statistically significant effects of practical importance for the goal of process improvement.

The ideal DOE for screening resolves main effects free of any two-factor interactions (2FIs) in a broad and shallow two-level factorial design. I recommend the “resolution IV” choices color-coded yellow on our “Regular Two-Level” builder (shown below). To get a handy (pun intended) primer on resolution, watch at least the first part of this Institute of Quality and Reliability YouTube video on Fractional Factorial Designs, Confounding and Resolution Codes.
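To make resolution IV concrete, here is a minimal Python sketch (not output from Stat-Ease software) that builds an 8-run half fraction for four factors, assuming the generator D = ABC. The factor names and helper functions are illustrative assumptions. It checks that every main effect is orthogonal to every 2FI, while the 2FIs are aliased with one another in pairs.

```python
from itertools import combinations, product


def half_fraction_4_factors():
    """Build the 8-run 2^(4-1) design in coded units, assuming D = ABC."""
    runs = []
    for a, b, c in product((-1, 1), repeat=3):
        runs.append({"A": a, "B": b, "C": c, "D": a * b * c})
    return runs


def column(runs, term):
    """Contrast column for a term such as 'A' or 'AB' (product of factor columns)."""
    col = []
    for run in runs:
        value = 1
        for factor in term:
            value *= run[factor]
        col.append(value)
    return col


def dot(u, v):
    """Dot product of two contrast columns; 0 means they are orthogonal."""
    return sum(x * y for x, y in zip(u, v))


runs = half_fraction_4_factors()
mains = ["A", "B", "C", "D"]
two_fis = ["".join(pair) for pair in combinations(mains, 2)]

# Main effects versus 2FIs: every dot product should be 0 (no aliasing).
main_vs_2fi = {
    (m, t): dot(column(runs, m), column(runs, t)) for m in mains for t in two_fis
}

# 2FIs versus 2FIs: identical columns mean the two effects cannot be separated.
aliased_pairs = [
    (s, t)
    for s, t in combinations(two_fis, 2)
    if column(runs, s) == column(runs, t)
]

print(all(v == 0 for v in main_vs_2fi.values()))
print(aliased_pairs)
```

In this sketch, the aliased pairs come out as AB with CD, AC with BD, and AD with BC. Main effects stay clear of the 2FIs, which is what makes a design like this suitable for screening but not for characterizing interactions.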

If you would like to screen more than 8 factors, choose one of our unique “Min-Run Screen” designs. However, I advise that you accept the program default to add 2 runs, which makes the experiment less susceptible to botched runs.

Stat-Ease® 360 and Design-Expert® software conveniently color-code and label different designs.

After throwing the trivial many factors off to the side (preferably by holding them fixed or blocking them out), the experimental program enters the characterization phase (the “C”) where interactions become evident. This requires a higher resolution of V or better (green Regular Two-Level or Min-Run Characterization), or possibly full (white) two-level factorial designs. Also, add center points at this stage so curvature can be detected.

If you encounter significant curvature (per the very informative test provided in our software), use our design tools to augment your factorial design into a central composite for response surface methods (RSM). You then enter the optimization phase (the “O”).
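As a rough illustration of what a curvature check does, the sketch below compares the average of the factorial points with the average of the center points and scales the difference by the pure error estimated from the replicated center points. This is one common textbook form of the test, not necessarily the exact calculation used in Stat-Ease software, and the response values are made up for illustration.

```python
from statistics import mean, variance


def curvature_check(factorial_y, center_y):
    """Return (difference in means, F-ratio) for a single-df curvature test.

    Assumes the center points are true replicates, so their sample
    variance estimates pure error with len(center_y) - 1 degrees of freedom.
    """
    n_f, n_c = len(factorial_y), len(center_y)
    diff = mean(factorial_y) - mean(center_y)
    ss_curvature = n_f * n_c * diff ** 2 / (n_f + n_c)
    pure_error_ms = variance(center_y)
    return diff, ss_curvature / pure_error_ms


# Hypothetical responses: four factorial corners and five center points.
factorial_y = [39.3, 40.0, 40.9, 41.5]
center_y = [40.3, 40.5, 40.7, 40.2, 40.6]

diff, f_ratio = curvature_check(factorial_y, center_y)
print(f"difference = {diff:.3f}, F = {f_ratio:.3f}")
```

An F-ratio well below 1, as with this made-up data, gives no evidence of curvature. A large ratio, judged against the F distribution with 1 and n_c - 1 degrees of freedom, would point toward augmenting to a central composite design.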

However, if curvature is of no concern, skip to ruggedness (the “R” that finalizes the “SCOR”) and, hopefully, confirm with a low-resolution (red) two-level design or a Plackett-Burman design (found under “Miscellaneous” in the “Factorial” section). Ideally, you then find that your improved process can withstand field conditions. If not, then you will need to go back up to the beginning for a do-over.

The SCOR strategy, with some modification due to the nature of mixture DOE, works equally well for developing product formulations as it does for process improvement. For background, see my October 2022 blog on Strategy of Experiments for Formulations: Try Screening First!

Stat-Ease provides all the tools and training needed to deploy the SCOR strategy of experiments. For more details, watch my January webinar on YouTube. Then to master it, attend our Modern DOE for Process Optimization workshop.

Know the SCOR for a winning strategy of experiments!

Dive into Diagnostics for DOE Model Discrepancies

Note: To learn more and see these graphs in action, check out the YouTube video “Dive into Diagnostics to Discover Data Discrepancies”.

The purpose of running a statistically designed experiment (DOE) is to take a strategically selected small sample of data from a larger system, and then extract a prediction equation that appropriately models the overall system. The statistical tool used to relate the independent factors to the dependent responses is analysis of variance (ANOVA). This article will lay out the key assumptions for ANOVA and how to verify them using graphical diagnostic plots.

The first assumption (and one that is often overlooked) is that the chosen model is correct. This means that the terms in the model explain the relationship between the factors and the response, and there are not too many terms (over-fitting), or too few terms (under-fitting). The adjusted R-squared and predicted R-squared values specify the amount of variation in the data that is explained by the model, and the amount of variation in predictions that is explained by the model, respectively. A lack of fit test (assuming replicates have been run) is used to assess model fit over the design space. These statistics are important but are outside the scope of this article.

The next assumptions are focused on the residuals: the differences between the actual observed values and their predicted values from the model. If the model is correct (first assumption), then the residuals should have no “signal” or information left in them. They should look like a sample of random variables and behave as such. If the assumptions are violated, then all conclusions that come from the ANOVA table, such as p-values, and calculations like R-squared values, are wrong. The assumptions for validity of the ANOVA are that the residuals:

- Are (nearly) independent,

- Have a mean = 0,

- Have a constant variance,

- Follow a well-behaved distribution (approximately normal).

Independence: since the residuals are generated based on a model (the difference between actual and predicted values) they are never completely independent. But if the DOE runs are performed in a randomized order, this reduces correlations from run to run, and independence can be nearly achieved. Restrictions on the randomization of the runs degrade the statistical validity of the ANOVA. Use a “residuals versus run order” plot to assess independence.

Mean of zero: due to the method of calculating the residuals for the ANOVA in DOE, this is given mathematically and does not have to be proven.

Constant variance: the response values will range from smaller to larger. As the response values increase, the residuals should continue to exhibit the same variance. If the variation in the residuals increases as the response increases, then this is non-constant variance. It means that you are not able to predict larger response values as precisely as smaller response values. Use a “residuals versus predicted value” graph to check for non-constant variance or other patterns.

Well-behaved (nearly normal) distribution: the residuals should be approximately normally distributed, which you can check on a normal probability plot.
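The three diagnostic plots described above can be sketched in a few lines of Python. This is a minimal illustration, assuming a one-factor linear model, made-up data listed in run order, raw (not studentized) residuals, and the plotting positions (i - 0.5)/n for the normal probability plot. It only prepares the coordinates for each plot; the function names are hypothetical.

```python
from statistics import NormalDist, mean


def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def diagnostics(x, y):
    """Return residuals plus coordinates for three diagnostic plots."""
    intercept, slope = fit_line(x, y)
    predicted = [intercept + slope * xi for xi in x]
    residuals = [yi - pi for yi, pi in zip(y, predicted)]
    n = len(residuals)
    # Theoretical normal quantiles paired with the sorted residuals.
    quantiles = [NormalDist().inv_cdf((i - 0.5) / n) for i in range(1, n + 1)]
    return {
        "residuals": residuals,
        "vs_run": list(zip(range(1, n + 1), residuals)),      # independence
        "vs_predicted": list(zip(predicted, residuals)),      # constant variance
        "normal_plot": list(zip(quantiles, sorted(residuals))),  # normality
    }


# Hypothetical data: coded factor levels and responses in randomized run order.
x = [-1, 1, 1, -1, 1, -1, -1, 1]
y = [10.2, 14.1, 13.8, 9.9, 14.3, 10.1, 9.7, 13.9]

plots = diagnostics(x, y)
print(f"sum of residuals = {sum(plots['residuals']):.2e}")
```

The sum of the residuals comes out at zero to within rounding error, which is the "mean of zero" property that holds by construction for a least squares fit with an intercept. The other three lists are what you would hand to a plotting tool and inspect for trends, funnels, or departures from a straight line.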

A frequent misconception by researchers is to believe that the raw response data needs to be normally distributed to use ANOVA. This is wrong. The normality assumption is on the residuals, not the raw data. A response transformation such as a log may be used on non-normal data to help the residuals meet the ANOVA assumptions.
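To see why a log transformation can help, consider a small made-up example in which the spread of the response grows in proportion to its level. The numbers below are hypothetical replicates at a low and a high response level; the sketch compares the ratio of standard deviations before and after taking logs.

```python
from math import log10
from statistics import stdev

# Hypothetical replicates: same relative spread, very different absolute spread.
low = [9.0, 10.0, 11.0]
high = [90.0, 100.0, 110.0]

# Ratio of standard deviations on the original scale.
raw_ratio = stdev(high) / stdev(low)

# Ratio of standard deviations after a log10 transformation.
log_ratio = stdev([log10(v) for v in high]) / stdev([log10(v) for v in low])

print(f"raw SD ratio = {raw_ratio:.2f}, log SD ratio = {log_ratio:.2f}")
```

On the original scale the high group is ten times as variable as the low group. After the log transformation the two groups have essentially the same spread, which is the kind of stabilization the constant-variance assumption calls for.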

Repeating a statement from above, if the assumptions are violated, then all conclusions that come from the ANOVA table, such as p-values, and calculations like R-squared values, are wrong, at least to some degree. Small deviations from the desired assumptions are likely to have small effects on the final predictions of the model, while large ones may have very detrimental effects. Every DOE needs to be verified with confirmation runs on the actual process to demonstrate that the results are reproducible.

Good luck with your experimentation!

New Software Features! What's in it for You?

There are a couple of features in the latest release of Design-Expert and Stat-Ease 360 software programs (version 22.0) that I really love, and wanted to draw your attention to. These features are accessible to everyone, whether you are a novice or an expert in design of experiments.

First, the Analysis Summary in the Post Analysis section: This provides a quick view of all response analyses in a set of tables, making it easy to compare model terms, statistics such as R-squared values, equations and more. We are pleased to now have this feature that has been requested many times! When you have a large number of responses, understanding the similarities and differences between the models may lead to additional insights into your product or process.

Second, the Custom Graphs (previously Graph Columns): Functionality and flexibility have been greatly expanded so that you can now plot analysis or diagnostic values, as well as design column information. Customize the colors, shapes and sizes of the points to tell your story in the way that makes sense to your audience.

Figure 1 (left) shows the layout of points in a central composite design, where the points are colored by their space point type (factorial, axial or center points) and then sized by the response value. We can visualize where in the design space the responses are smaller versus larger.

In Figure 2 (right), I had a set of existing runs that I wanted to visualize in the design space. Then I augmented the design with new runs. I set the Color By option to Block to clearly see the new (green) runs that were added to the design space.

These new features offer many new ways to visualize your design, response data, and other pieces of the analysis. What stories will you tell?

Augmenting One-Factor-at-a-Time Data to Build a DOE

I am often asked if the results from one-factor-at-a-time (OFAT) studies can be used as a basis for a designed experiment. They can! This augmentation starts by picturing how the current data is laid out, and then adding runs to fill out either a factorial or response surface design space.

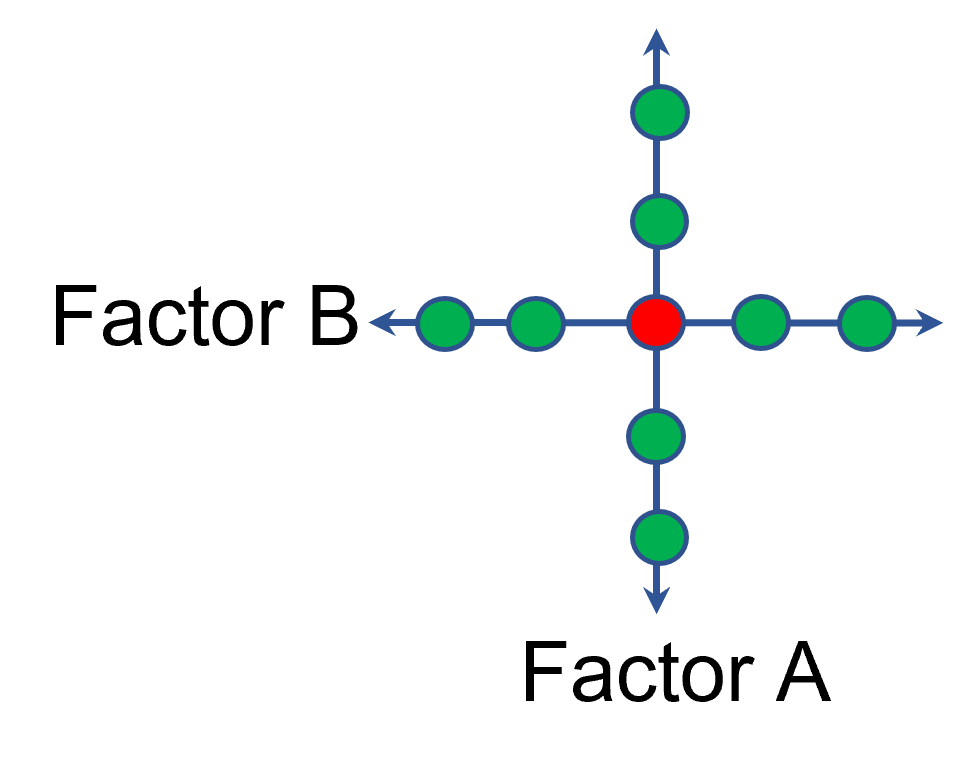

One way of testing multiple factors is to choose a starting point and then change the factor level in the direction of interest (Figure 1 – green dots). This is often done one variable at a time “to keep things simple”. This data can confirm an improvement in the response when any of the factors are changed individually. However, it does not tell you if making changes to multiple factors at the same time will improve the response due to synergistic interactions. With today’s complex processes, the one-factor-at-a-time experiment is likely to provide insufficient information.

Figure 1: OFAT

The experimenter can augment the existing data by extending a factorial box/cube from the OFAT runs and completing the design by running the corner combinations of the factor levels (Figure 2 – blue dots). When analyzing this data together, the interactions become clear, and the design space is more fully explored.

Figure 2: Fill out to factorial region
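The augmentation step can be sketched in code. This minimal example assumes three factors in coded units (-1 for the starting level, +1 for the changed level) and hypothetical helper names; it lists the corner runs that still need to be performed to complete the two-level factorial.

```python
from itertools import product


def ofat_runs(k):
    """Starting point plus one run per factor changed individually (coded -1/+1)."""
    start = tuple([-1] * k)
    runs = [start]
    for i in range(k):
        changed = list(start)
        changed[i] = 1
        runs.append(tuple(changed))
    return runs


def runs_to_add(existing, k):
    """Corners of the full 2^k factorial that are not already in the data."""
    done = set(existing)
    return [corner for corner in product((-1, 1), repeat=k) if corner not in done]


existing = ofat_runs(3)
new_runs = runs_to_add(existing, 3)
print(new_runs)
```

With three factors, the four OFAT runs cover only half of the eight corners. The four added runs are exactly the combinations where two or more factors are changed together, which is where the information about interactions comes from.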

In other cases, OFAT studies may be done by taking a standard process condition as a starting point and then testing factors at new levels both lower and higher than the standard condition (see Figure 3). This data can estimate linear and nonlinear effects of changing each factor individually. Again, it cannot estimate any interactions between the factors. This means that if the process optimum is anywhere other than exactly on the lines, it cannot be predicted. Data that more fully covers the design space is required.

Figure 3: OFAT

A face-centered central composite design (CCD)—a response surface method (RSM)—has factorial (corner) points that define the region of interest (see Figure 4 – added blue dots). These points are used to estimate the linear and the interaction effects for the factors. The center point and mid points of the edges are used to estimate nonlinear (squared) terms.

Figure 4: Face-Centered CCD

If an experimenter has completed the OFAT portion of the design, they can augment the existing data by adding the corner points and then analyzing as a full response surface design. This set of data can now estimate up to the full quadratic polynomial. There will likely be extra points from the original OFAT runs, which although not needed for model estimation, do help reduce the standard error of the predictions.
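One way to check this claim is to look at the rank of the model matrix for the full quadratic polynomial. The sketch below assumes two factors in coded units and an OFAT study with a center point plus low and high levels of each factor; the helper functions are hypothetical. A rank of 6 means all six quadratic coefficients can be estimated.

```python
from fractions import Fraction
from itertools import product


def quadratic_row(x1, x2):
    """Model terms: intercept, x1, x2, x1*x2, x1^2, x2^2."""
    return [1, x1, x2, x1 * x2, x1 ** 2, x2 ** 2]


def rank(matrix):
    """Matrix rank by exact Gauss-Jordan elimination."""
    rows = [[Fraction(v) for v in row] for row in matrix]
    found = 0
    for col in range(len(rows[0])):
        pivot = next(
            (r for r in range(found, len(rows)) if rows[r][col] != 0), None
        )
        if pivot is None:
            continue  # no pivot in this column
        rows[found], rows[pivot] = rows[pivot], rows[found]
        for r in range(len(rows)):
            if r != found and rows[r][col] != 0:
                factor = rows[r][col] / rows[found][col]
                rows[r] = [a - factor * b for a, b in zip(rows[r], rows[found])]
        found += 1
    return found


# Existing OFAT runs: center point plus each factor moved low and high alone.
ofat = [(0, 0), (-1, 0), (1, 0), (0, -1), (0, 1)]
# Added corner points complete the face-centered CCD.
corners = list(product((-1, 1), repeat=2))

rank_ofat = rank([quadratic_row(*p) for p in ofat])
rank_ccd = rank([quadratic_row(*p) for p in ofat + corners])
print(rank_ofat, rank_ccd)
```

The OFAT runs alone give a rank of 5 because the interaction column is zero for every run, so that term cannot be estimated. Adding the four corners raises the rank to 6, the number of terms in the two-factor quadratic model.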

Running a statistically designed experiment from the start reduces the overall experimental resources required. But it is good to recognize that existing data can be augmented to gain valuable insights!

Learn more about design augmentation at the January webinar: The Art of Augmentation – Adding Runs to Existing Designs.