Stat-Ease Blog


Energize Two-Level Factorials - Add Center Points!

Two-level factorial designs are highly effective for discovering active factors and interactions in a process, and are optimal for fitting linear models by simply comparing low vs high factor settings. Super-charge these classic designs by adding center points!

(Read to the end for a bonus video clip!)

There is an underlying assumption that the straight-line model also fits the interior of the design space, but there is no actual check on this assumption unless center points (the mid-level) are added to the design. Figure 1 illustrates how the addition of center points helps you detect non-linearity in the middle of the experimental space.

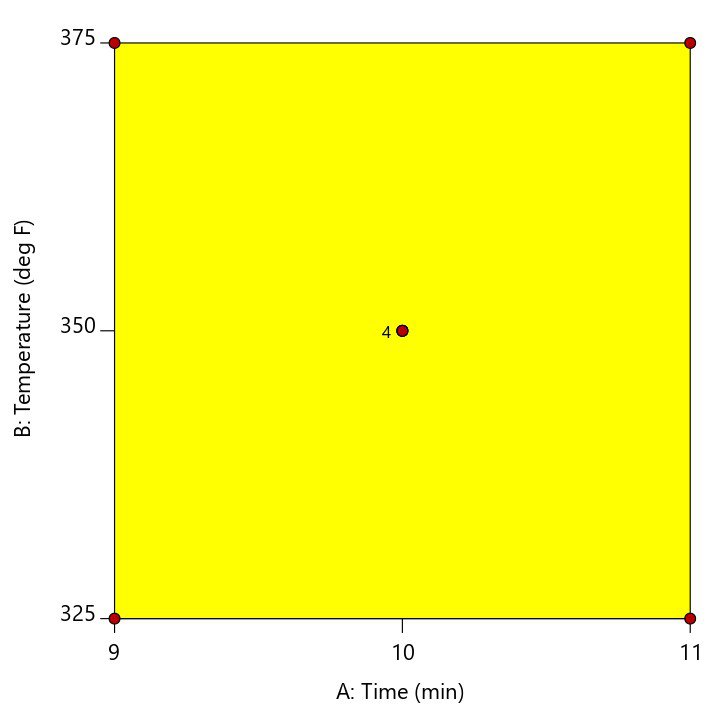

A center point is located at the exact mid-point of all factor settings. The example in Figure 2 shows a cookie baking experiment where the center point is replicated four times at the mid-point of 10 minutes and 350 degrees.

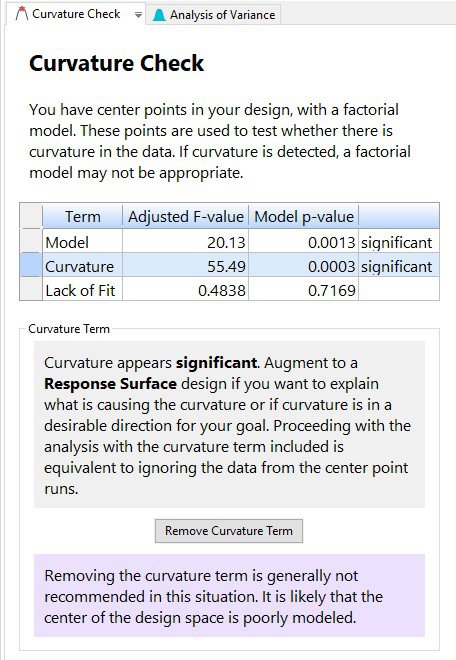

Multiple center points (replicates) should be randomized among the other experimental runs to give an adequate assessment of whether the actual values measured at this point match what the linear model predicts. This is called a test for curvature: it compares the average response at the center points with the average of the factorial (corner) points, which is what a linear model predicts for the center. If the curvature test is significant, this is considered evidence that a quadratic or higher-order model is required to model the relationship between the factors and the response. If the curvature test is not significant, then it is okay to assume that the linear model fits in the middle of the design space.
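To make the curvature test concrete, here is a minimal Python sketch of the textbook single-degree-of-freedom version. It assumes an unreplicated two-level factorial, so pure error comes only from the replicated center points. The function name and the taste scores in the example are hypothetical, and Design-Expert's own ANOVA may pool error differently.

```python
from statistics import mean


def curvature_test(factorial_y, center_y):
    """Return (SS_curvature, MS_pure_error, F) for a two-level factorial with center points.

    Assumption: pure error comes only from the replicated center points,
    which is the usual choice when the factorial runs are not replicated.
    """
    n_f, n_c = len(factorial_y), len(center_y)
    if n_c < 2:
        raise ValueError("need at least two center points to estimate pure error")
    ybar_f, ybar_c = mean(factorial_y), mean(center_y)
    # Single-degree-of-freedom sum of squares comparing corner and center averages
    ss_curv = n_f * n_c * (ybar_f - ybar_c) ** 2 / (n_f + n_c)
    # Pure error: scatter of the center replicates around their own mean
    ss_pe = sum((y - ybar_c) ** 2 for y in center_y)
    ms_pe = ss_pe / (n_c - 1)
    # With 1 numerator df, MS_curvature equals SS_curvature
    return ss_curv, ms_pe, ss_curv / ms_pe


# Hypothetical responses: four 2^2 factorial runs, then four center replicates
ss, ms, f_ratio = curvature_test([60, 72, 65, 79], [80, 82, 79, 81])
print(f"SS_curvature={ss:.2f}, MS_pure_error={ms:.3f}, F={f_ratio:.1f}")
```

With these made-up numbers the F ratio is about 158.7, far above the tabled 5% critical value of roughly 10.13 for 1 and 3 degrees of freedom, so curvature would be flagged as significant.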

In Design-Expert® software, version 11, the curvature test is placed ahead of the ANOVA when you have included center points in the design. This immediately shows you whether the model is significant and whether the curvature is significant. As illustrated by the screenshot below (Figure 3), advice is provided to guide your next steps.

New to DX11 is the “Remove Curvature Term” button. If curvature is significant and you click this button, the regression model is fit using all the data, including the center points. Because the actual center-point responses do not lie on the flat surface that the linear model predicts for the middle of the design space, the resulting model will very likely fit poorly and the lack-of-fit test will be significant. Then click “Add Curvature Term” to put the curvature effect back into the model, thus accounting for the information in the middle of the design space.
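The sketch below illustrates why lack of fit appears once the curvature term is removed. It is an illustration only, not Design-Expert's algorithm: it fits a two-factor model with interaction to a 2² factorial plus four center points, using hypothetical responses and the shortcut that orthogonal coded columns let each coefficient be computed as a simple ratio.

```python
from statistics import mean


def fit_coded_model(runs, y):
    """Least-squares fit of y = b0 + b1*x1 + b2*x2 + b12*x1*x2 in coded units.

    Shortcut: in a full 2^2 factorial plus center points at (0, 0) the model
    columns are mutually orthogonal, so each coefficient is a simple ratio.
    """
    columns = {
        "x1": [x1 for x1, _ in runs],
        "x2": [x2 for _, x2 in runs],
        "x1x2": [x1 * x2 for x1, x2 in runs],
    }
    coefs = {"b0": mean(y)}  # intercept = grand mean of ALL runs, centers included
    for name, col in columns.items():
        coefs[name] = sum(c * v for c, v in zip(col, y)) / sum(c * c for c in col)
    return coefs


runs = [(-1, -1), (1, -1), (-1, 1), (1, 1), (0, 0), (0, 0), (0, 0), (0, 0)]
y = [60, 72, 65, 79, 80, 82, 79, 81]  # hypothetical responses
b = fit_coded_model(runs, y)
fitted = [b["b0"] + b["x1"] * x1 + b["x2"] * x2 + b["x1x2"] * x1 * x2 for x1, x2 in runs]
residuals = [obs - fit for obs, fit in zip(y, fitted)]
print("corner residuals:", residuals[:4])
print("center residuals:", residuals[4:])
```

The intercept becomes the grand mean (74.75), so every corner residual is -5.75 and every center residual is positive. That one-sided pattern is exactly what a lack-of-fit test detects.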

Ultimately, if curvature is significant, the recommendation is to augment the design to a response surface design to better model the relationship between the factors and the response. If curvature is NOT significant, then proceeding with the analysis is acceptable.

For further details on curvature, check out the DOE Simplified textbook, or enroll in an upcoming Modern DOE for Process Optimization workshop.

Bonus: Check out Mark’s 1-minute video on this topic: MiniTip 2 - Center points in factorials

How To Save Costly Project Engineering Time With This Innovative Application of Response Surface Models (RSM)

Lead engineers are incredibly overworked, and their time is disproportionately valuable compared to that of more junior engineers. But when it comes to project cost estimates, we have little choice but to ask some of these high-value engineers for them if we want a realistic level of accuracy. Plus, the unfortunate reality is that many project costs end up being too high to justify the screening business case, so the project is canceled, and the high-value engineering time is wasted. I found a unique solution to this time sink: using RSM to create a Ballpark Project Cost Estimator.

Not only did the ballpark estimator reduce engineering time spent on early phase projects, it also shortened the market opportunity evaluation timeline. This enabled a level of market opportunity screening that the organization had never experienced before. It ended up being an incredibly useful business tool that I wish more organizations had the benefit of using. I am excited to share the 5 steps to creating your own Ballpark Project Cost Estimator.

1. Build and Define Structured Cost Driver Definitions

Develop a list of items that are most likely to drive project cost: things like new suppliers, re-use of new or existing product platforms, level of tear-up/re-work, project timeline, and so forth. Somewhere between 7 and 15 factors is probably reasonable. Then define very clearly what each scoring level of each factor means. In one case, we used 1, 3, 9 ordinal scaling for each level, since that was the numbering we used habitually. In the example, we're using 1, 2, and 3. Any ordinal, interval, or ratio scale of measurement can work as long as each level is defined clearly enough for people to score consistently. Figure 1 shows a few examples of defined factor level descriptors.

Figure 1. Project cost driver factor level definitions example. This is the recommended level of structure around each factor scoring level.

2. Survey Qualified Personnel

Once the structure around consistently defining scores is built, ask the project manager and chief engineer to score all of the projects which were run in recent history. We used projects from the last 5–7 years, but what your organization uses will depend on project length and reasonable confidence that people will remember well enough to score fairly.

3. Analyze

With the scores for each factor and the actual finance data for each project, use the Historical Data design in Design-Expert® software. We're mostly going to use discrete numeric factors at 3 levels. If you decide to define your inputs on a sliding scale, then you would set them up as continuous numeric factors instead.

Figure 2. Historical Data Design for the sample project. We’re using 3 discrete levels for numerical factors to analyze the structured survey data.

In a real application, I found that I only had enough projects to build a linear model of the predicted expense as a function of the scores on twelve three-level ordinal factors. In the sample, since I'm using fewer factors, I have enough data for a higher-order model, so I'm going to use it just because I can! Tip: when entering the design data, right-click in the top-left area of the data table and add a comments column, or select View > Display Columns > Comments. Put the project name in that column so that you can view it later during the analysis phase.
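Under the hood, fitting the linear ballpark model is ordinary least squares. This pure-Python sketch, with hypothetical function names and made-up project scores, shows that core step. The costs were generated from an assumed formula (0.5 + 1.2·x1 + 0.8·x2 + 2.0·x3, in $M) so the fit can be checked, and it omits the ANOVA, diagnostics, and intervals that Design-Expert reports. Note that a linear model in twelve factors has 13 coefficients, so it needs at least 13 distinct projects, and in practice many more.

```python
def solve(a, rhs):
    """Solve the square system a @ x = rhs by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(map(float, row)) + [float(v)] for row, v in zip(a, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: too few distinct projects for this model")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [u - factor * p for u, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_linear(scores, costs):
    """Ordinary least squares for cost = b0 + sum(b_i * score_i) via the normal equations."""
    x = [[1.0] + [float(s) for s in row] for row in scores]  # prepend intercept column
    p = len(x[0])
    xtx = [[sum(row[i] * row[j] for row in x) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * c for row, c in zip(x, costs)) for i in range(p)]
    return solve(xtx, xty)


def predict(coefs, score_row):
    """Ballpark cost for one project's factor scores."""
    return coefs[0] + sum(b * s for b, s in zip(coefs[1:], score_row))


# Hypothetical history: three factors scored 1-3; costs in $M from an assumed formula
scores = [(1, 1, 1), (3, 1, 2), (2, 3, 1), (1, 2, 3), (3, 3, 3), (2, 2, 2)]
costs = [0.5 + 1.2 * a + 0.8 * b + 2.0 * c for a, b, c in scores]
coefs = fit_linear(scores, costs)
print("coefficients:", [round(v, 3) for v in coefs])
print("ballpark for scores (2, 1, 3): $%.1fM" % predict(coefs, (2, 1, 3)))
```

Real project costs would not fall exactly on a plane, so the residual scatter is what produces the wide confidence interval discussed next.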

In some real applications, there were areas of the modeled space with almost no data. But that is not actually a problem if we think critically about it. Since future projects will most likely look like projects that have been done in the past, few projects should fall in areas of the design space that have no data. Figure 3 shows one area of the model that illogically predicts a cost of less than zero dollars. This area has no actual data and illustrates the point.

Awareness of this limitation is why we built some of the process safeguards described in Step 5. Figure 3 also shows the power of the crosshairs window view. It shows that in the current area, the ballpark project cost is ~$6.5M, with a 95% CI low of $3.5M and a 95% CI high of $9.4M. That's a fairly wide range, but it's good enough to start a conversation about whether the project is worth investing in.

Figure 3. RSM screenshot of the sample data with cross-hairs window open from View > Show Crosshairs Window

4. Test and Add Supporting Information

Once we had the model built, we compared its predictions with some recent engineering project estimates, and they were freakishly accurate. We also added a capability for whenever a model projection was made. We found that if you take, for each project, the product of all the coefficient-times-factor-score terms (in contrast to the sum of those same terms, which is what the Actual Equation uses), projects with similar scores ended up with similar values. So we used that value to do a reverse lookup and pull in the projects that were most similar. (Yes, there is a risk of two equally weighted factors being aliased, but we were OK with that since the use of the result is primarily subjective.)

As a result, anytime someone used the model, it would spit out a projected value and a list of the projects that were the closest in terms of similar "product of product" scores. To use Design-Expert's built-in capability here, go to Display Options > Design Points > Show and the actual points you measured will show up on the interactive plots. This will also show the project name that you previously added to the comments column in the design data entry.
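One reading of the "product of products" lookup is sketched below: for each project, multiply together every coefficient-times-score term (excluding the intercept, which is an assumption), then list the historical projects whose product is closest to the new project's. The function names, coefficients, and projects are hypothetical.

```python
from math import prod


def product_score(coefs, score_row):
    """Product of every (coefficient * factor score) term; intercept coefs[0] excluded."""
    return prod(b * s for b, s in zip(coefs[1:], score_row))


def similar_projects(coefs, new_scores, history, k=3):
    """Names of the k historical projects whose product score is closest to the new project's."""
    target = product_score(coefs, new_scores)
    ranked = sorted(history, key=lambda item: abs(product_score(coefs, item[1]) - target))
    return [name for name, _ in ranked[:k]]


coefs = [0.5, 1.2, 0.8, 2.0]  # hypothetical fitted coefficients (intercept first)
history = [
    ("Project A", (1, 1, 1)),
    ("Project B", (3, 1, 2)),
    ("Project C", (2, 2, 2)),
    ("Project D", (1, 1, 3)),
    ("Project E", (3, 3, 3)),
]
print(similar_projects(coefs, (2, 2, 1), history))
```

Because the product factors into (b1·b2·b3) × (s1·s2·s3), the coefficients act as one common scale factor, so any projects whose scores multiply to the same value tie. That is the aliasing risk accepted above, and it is why the list is best treated as a prompt for judgment rather than a strict ranking.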

5. Develop Process that Supports the Intention of the Tool

In real-world use, we made sure to cover the limitations of the tool through careful consideration of how it was used within a process. Once the tool was finished, we knew what it was capable of, but we also knew what it wasn't capable of. So we developed a process that defined when and where the tool was used. The tool was used early on, in project chartering and market profitability analysis. We wanted to avoid ever holding an engineer responsible for a budget that came from the ballpark estimator; we only wanted to use it to reduce the number of weeks our highly talented engineers spent on estimates that didn't require a high level of accountability.

Conclusion

With clearly structured definitions for characterizing project cost drivers, plus Design-Expert software, we were able to nearly eliminate the time that high-value engineers spent on early-stage project cost estimates. This enabled our product planning, strategy, and budgeting offices to speed up their early-stage planning while reducing the load on our overtaxed engineers. We also built a process around the limitations of the tool to ensure that the organization wouldn't end up in a situation without clear lines of accountability. This is a great example of the many ways that DOE, RSM, and statistical methods can streamline business planning.

About the Guest Author

Nate Kaemingk is an experienced project manager, consultant, and founder of Small Business Decisions. He writes about business decision making and the unique business solutions that are possible by combining statistics with business. His focus is on providing every business decision-maker with access to clear, concise, and effective tools to help them make better decisions. For more information, visit his website or email him.

Five Keys to Increase ROI for DOE On-Site Training

A recent discussion with a client led to these questions: “How do we keep design of experiments (DOE) training ‘alive’ so that long-term benefits can be seen? How do we ensure our employees will apply their new-found skills to positively impact the business?” In my 20+ years as a DOE consultant and trainer, I have seen many companies that invested in on-site training, only to have it die a quick death mere days after the instructor left. On the other hand, we have long-term relationships with clients who have fully integrated design of experiments into the very culture of their research and development, and wouldn’t consider doing it any other way. What are the keys that lead the latter to success?

Key #1: Top-Down Management Support

Management must focus on long-term results rather than short-term fixes. Design of experiments is a key tool for gaining a fundamental understanding of processes. When combined with basic scientific and engineering knowledge, it helps technical professionals discover the critical interactions that drive the process. It’s not free: experimentation costs time and money. But forward-thinking companies understand that the long-term gains are worth the short-term expense. Management needs to buy in to the use of DOE as a strategic initiative for future success.

Key #2: Data-Driven Decisions

Long-term success is achieved when management insists on using data to make decisions. My first engineering role was in a company that told us, “All decisions are made based on data.” Engineers were expected to collect data and bring it to the table. DOE was one of the preferred methods to collect and analyze data to make those decisions. Key #2 is ingraining into the business the expectation that data-driven decisions will benefit the company longer than gut-feel decisions.

Key #3: Peer-to-Peer Learning

People like to learn from each other. Training can be sustained by learning from DOEs done by peers. One way to support this is to plan monthly “lunch and learn” sessions. Everyone brings their own lunch (or order pizza!), and 2–3 people give informal presentations on either a recently completed experiment or their proposed plan for a future one. If the experiment is completed, review the data analysis, lessons learned, and future plans. If it is a proposed DOE plan, discuss potential barriers and roadblocks, and then brainstorm options for solving them. The entire session should be run in an open and educational atmosphere, with the focus on learning from each other. This key demonstrates the practical application of DOE and inherently encourages others to try it.

Key #4: Practice, Practice, Practice

Company management should plan for on-site training to result in a specific project that applies DOE. Teams should plan an experiment that can be run as soon as possible to reinforce the concepts learned. As DOEs are completed, the data can be shared with classmates simply to give everyone some practice datasets. The mantra “use it or lose it” is very true of data analysis skills, and setting aside some time to get together and review company data will go a long way toward reinforcing the skills recently learned. Schedule a follow-up webinar with the instructor if more guidance is needed.

Key #5: Local Champions

There are always a couple of people who gravitate naturally towards data analysis. These people just seem to “get it”. Invest in those people by providing them with additional training so that they can become in-house mentors for others. This builds their professional reputation and creates a positive, driving force within the company for sustainability.

Summary

The investment in on-site training should include a company plan to sustain the education long-term. Good management support is an essential start, establishing expectations for using design of experiments and other statistical tools. Employees should then be connected with champions and given opportunities to apply DOE and share practical learning experiences with their peers.

Adding Intervals to Optimization Graphs

Design-Expert® software provides powerful features to add confidence, prediction, or tolerance intervals to its graphical optimization plots. All users can benefit by seeing how this provides a more conservative ‘sweet spot’. However, this innovative enhancement is of particular value for those in the pharmaceutical industry who hope to satisfy the US FDA’s QbD (quality by design) requirements.

Here are the definitions:

Confidence Interval (CI): an interval that covers a population parameter (like a mean) with a pre-determined confidence level (such as 95%).

Prediction Interval (PI): an interval that covers a future outcome from the same population with a pre-determined confidence level.

Tolerance Interval (TI): an interval that covers a fixed proportion of outcomes from the population with a pre-determined confidence level, allowing for the uncertainty in the estimated population mean and standard deviation. (For example, 99% of the product will be in spec with 95% confidence.)

Note that a confidence interval contains a parameter (σ, μ, ρ, etc.) with “1-alpha” confidence, while a tolerance interval contains a fixed proportion of a population with “1-alpha” confidence.

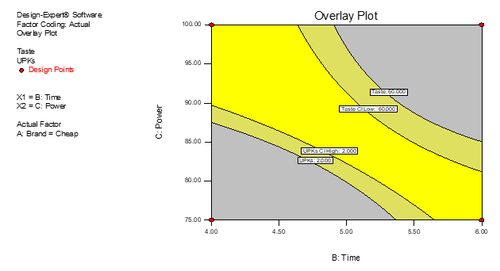

These intervals are displayed numerically under Point Prediction as shown in Figure 1. They can be added as interval bands in graphical optimization, as shown in Figure 2. (Data is taken from our microwave popcorn DOE case, available upon request.) This pictorial representation is great for QbD purposes because it helps focus the experimenter on the region where they are most likely to get consistent production results. The confidence levels (alpha value) and population proportion can be changed under the Edit Preferences option.
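For intuition about why the three bands nest, here is a hedged one-sample sketch in Python: a single normal sample with no model, not the model-based intervals Design-Expert computes from the fitted equation. The tolerance interval uses Howe's approximation for the k factor, and the t and chi-square quantiles are passed in from tables (t = 2.262 and lower 5% chi-square = 3.325 for 9 degrees of freedom) to stay within the standard library. The function name and data are made up.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def one_sample_intervals(data, t_crit, chi2_lower, proportion=0.99):
    """Two-sided CI, PI and approximate TI for a single normal sample.

    t_crit:     two-sided t quantile with n-1 df at the chosen confidence (from a table)
    chi2_lower: lower-tail chi-square quantile with n-1 df at alpha (from a table)
    proportion: fraction of the population the tolerance interval should cover
    """
    n = len(data)
    ybar, s = mean(data), stdev(data)
    ci_half = t_crit * s * sqrt(1 / n)      # covers the population mean
    pi_half = t_crit * s * sqrt(1 + 1 / n)  # covers one future observation
    z = NormalDist().inv_cdf((1 + proportion) / 2)
    k = sqrt((n - 1) * (1 + 1 / n) * z ** 2 / chi2_lower)  # Howe's approximate k factor
    ti_half = k * s                          # covers `proportion` of the population
    return {
        "CI": (ybar - ci_half, ybar + ci_half),
        "PI": (ybar - pi_half, ybar + pi_half),
        "TI": (ybar - ti_half, ybar + ti_half),
    }


# Hypothetical sample of 10 measurements; 95% confidence, 99% coverage for the TI
sample = [12.1, 11.8, 12.4, 12.0, 11.9, 12.3, 12.2, 11.7, 12.0, 12.1]
intervals = one_sample_intervals(sample, t_crit=2.262, chi2_lower=3.325)
for name, (lo, hi) in intervals.items():
    print(f"{name}: {lo:.2f} to {hi:.2f}")
```

For any sample of this size, the half-widths scale as roughly 0.72s, 2.37s, and 4.44s, so the tolerance interval is always the most conservative of the three.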

Choosing the Best Design for Process Optimization

Ever wonder what the difference is between the various response surface method (RSM) optimization design options? To help you choose the best design for your experiment, I’ve put together a list of things you should know about each of the three primary response surface designs—Central Composite, Box-Behnken, and Optimal.

Central Composite Design (CCD)

- Developed for estimating a quadratic model

- Created from a two-level factorial design, and augmented with center points and axial points

- Relatively insensitive to missing data

- Features five levels for each factor (Note: The number of levels can be reduced by choosing alpha=1.0, a face-centered CCD which has only three levels for each factor.)

- Provides excellent prediction capability near the center (bullseye) of the design space
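To see where the five levels come from, this Python sketch lists the coded runs of a CCD built on a full 2^k factorial. It assumes the rotatable axial distance alpha = (2^k)^(1/4), which applies to a full-factorial core; the function names are hypothetical and the center-point count is just a placeholder, not a recommendation.

```python
from itertools import product


def ccd(k, n_center=6, alpha=None):
    """Coded-unit runs for a central composite design on a full 2^k factorial.

    alpha=None uses the rotatable value (2**k) ** 0.25; alpha=1.0 gives a face-centered CCD.
    """
    if alpha is None:
        alpha = (2 ** k) ** 0.25
    factorial = [list(p) for p in product((-1.0, 1.0), repeat=k)]
    axial = []
    for i in range(k):
        for value in (-alpha, alpha):
            point = [0.0] * k
            point[i] = value  # one factor at +/- alpha, all others at the center
            axial.append(point)
    center = [[0.0] * k for _ in range(n_center)]
    return factorial + axial + center


def levels_per_factor(design):
    """Count the distinct settings each factor takes across the design."""
    return [len({round(run[i], 6) for run in design}) for i in range(len(design[0]))]


rotatable = ccd(3)
face_centered = ccd(3, alpha=1.0)
print(len(rotatable), levels_per_factor(rotatable), levels_per_factor(face_centered))
```

For three factors this gives 8 factorial, 6 axial, and 6 center runs, with five levels per factor; passing alpha=1.0 collapses it to the three-level face-centered version.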

Box-Behnken Design (BBD)

- Created for estimating a quadratic model

- Requires only three levels for each factor

- Requires specific positioning of design points

- Provides strong coefficient estimates near the center of the design space, but falls short at the corners of the cube (no design points there)

- BBD vs CCD: If you end up missing any runs, the accuracy of the remaining runs in the BBD becomes critical to the dependability of the model, so go with the more robust CCD if you often lose runs or mismeasure responses.
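A comparable sketch for the Box-Behnken design builds each run by setting one pair of factors to ±1 while holding the rest at 0, which is why no run lands on a corner of the cube. This pairwise construction matches the standard BBD for three to five factors only; the function name and the default of three center points are assumptions.

```python
from itertools import combinations, product


def bbd(k, n_center=3):
    """Coded-unit Box-Behnken runs: every pair of factors at +/-1, the others at 0.

    The pairwise construction reproduces the standard BBD only for 3 to 5 factors;
    larger designs use different blocks and are not covered here.
    """
    if not 3 <= k <= 5:
        raise ValueError("pairwise construction only covers 3 to 5 factors")
    runs = []
    for i, j in combinations(range(k), 2):
        for a, b in product((-1, 1), repeat=2):
            point = [0] * k
            point[i], point[j] = a, b  # edge midpoint of the cube
            runs.append(point)
    runs += [[0] * k for _ in range(n_center)]
    return runs


design = bbd(3)
print(len(design), "runs")
```

Every factor takes only the levels -1, 0, and +1, which matches the three-level property listed above.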

Optimal Design

- Customize for fitting a linear, quadratic or cubic model (Note: In Design-Expert® software you can change the user preferences to get up to a 6th order model.)

- Produce many levels when augmented as suggested by Design-Expert, but these can be limited by choosing the discrete factor option

- Design points are positioned mathematically according to the number of factors and the desired model. Therefore, the points are not at any specific positions; they are simply spread out in the design space to meet the optimality criterion, particularly when using the coordinate exchange algorithm

- The default “I” optimality criterion chooses points that minimize the integral of the prediction variance across the design space, thus providing good response estimation throughout the experimental region.

- Other comments: If you have knowledge of the subject matter, you can edit the desired model by removing terms that you know are not significant or cannot exist. This will decrease the required number of runs. You can also add constraints to your design space, for instance to exclude particular factor combinations that must be avoided, e.g., high temperature combined with long cooking time.
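The I-criterion can be illustrated on the smallest possible case. This hedged Python sketch scores candidate designs for one factor and a quadratic model by averaging the scaled prediction variance f(x)'(X'X)^-1 f(x) over [-1, 1] with a midpoint rule. It only evaluates designs; it is not the coordinate-exchange search Design-Expert runs, and the function names and candidate designs are hypothetical.

```python
def solve(a, rhs):
    """Solve the square system a @ x = rhs by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(map(float, row)) + [float(v)] for row, v in zip(a, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular design: model cannot be estimated")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [u - factor * p for u, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def quad_terms(x):
    """Model terms for a one-factor quadratic: 1, x, x^2."""
    return [1.0, x, x * x]


def avg_prediction_variance(design, grid_points=2000):
    """I-criterion sketch: mean of f(x)' (X'X)^-1 f(x) over [-1, 1] (midpoint rule).

    Reported in units of the error variance; smaller means better average prediction.
    """
    rows = [quad_terms(x) for x in design]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    h = 2.0 / grid_points
    total = 0.0
    for g in range(grid_points):
        fx = quad_terms(-1.0 + (g + 0.5) * h)
        z = solve(xtx, fx)  # z = (X'X)^-1 f(x)
        total += sum(a * b for a, b in zip(fx, z))
    return total / grid_points


center_twice = [-1.0, 0.0, 0.0, 1.0]
edge_twice = [-1.0, -1.0, 0.0, 1.0]
print(round(avg_prediction_variance(center_twice), 3), round(avg_prediction_variance(edge_twice), 3))
```

Spending the fourth run on a second center point gives an average variance of about 0.533, versus about 0.733 for duplicating an edge point, so the I-criterion prefers the former.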

For an in-depth exploration of both factorial and response surface methods, attend Stat-Ease’s Modern DOE for Process Optimization workshop.

Shari Kraber

shari@statease.com