Stat-Ease Blog

Categories

Improving Your Predictive Model via a Response Transformation

A good predictive model must exhibit overall significance and, ideally, insignificant lack of fit plus high adjusted and predicted R-squared values. Furthermore, to ensure statistical validity (e.g., normality, constant variance) the model’s residuals must pass a series of diagnostic tests (fortunately made easy by Stat-Ease software):

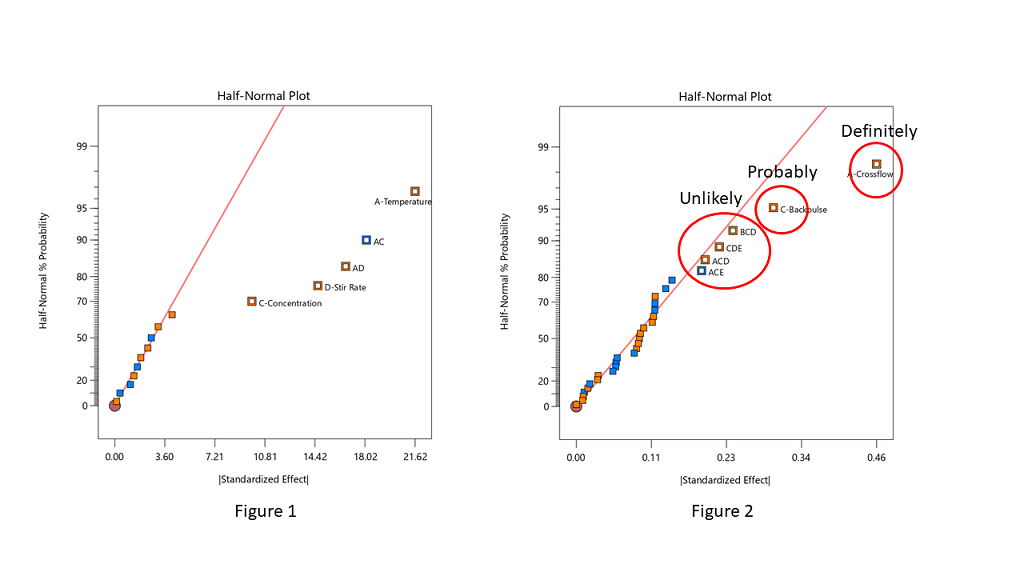

- Normal plot of residuals illustrates a relatively straight line. If you can cover the residuals with a fat pencil, no worries, but watch out for a pronounced S-shaped curve such as Figure 1 exhibits.

- Residuals-versus-predicted plot has points scattered randomly, i.e., demonstrating a constant variance from left to right. Beware of a “megaphone” shape as seen in Figure 2.

- Residuals-versus-run plot exhibiting no trends, shifts or outliers (points outside the red lines such as seen in Figure 3).

When diagnostic plots of residuals do not pass the tests, the first thing you should consider for a remedy is a response transformation, e.g., rescaling the data via a natural log (again made easy by Stat-Ease software). Then re-fit the model and re-check the diagnostic plots. Often you will see improvements in both the statistics and the plots of residuals.

The Box-Cox plot (see Figure 4) makes the choice of transformation very simple. Based on the fitted model, this diagnostic displays a comparable measure of residuals against a range of power transformations, e.g., taking the inverse of all your responses (lambda -1), or squaring them all (lambda 2). Obviously, the lower the residuals the better. However, only go for a transformation if your current responses at the power of 1 (the blue line), fall outside the red-lined confidence interval, such as Figure 4 display. Then, rather than going to the exact-optimal power (green line), select one that will be simpler (and easier to explain)--the log transformation in this case (conveniently recommended by Stat-Ease software).

See the improvement made by the log transformation in the diagnostics (Figures 5, 6 and 7). All good!

In conclusion, before pressing ahead with any model (or abandoning it), always check the residual diagnostics. If you see any strange patterns, consider a response transformation, particularly if advised to do so by the Box-Cox plot. Then confirm the diagnostics after re-fitting the model.

For more details on diagnostics and transformations see How to Use Graphs to Diagnose and Deal with Bad Experimental Data.

Good luck with your modeling!

~ Shari Kraber, shari@statease.com

R-Squared Mysteries Solved

Design of experiments (DOE) and the resulting data analysis yields a prediction equation plus a variety of summary statistics. A set of R-squared values are commonly used to determine the goodness of model fit. In this blog, I peel back the raw versus adjusted versus predicted R-squared and explain how each can be interpreted, along with the relationships between them. The calculations of these values can be easily found online, so I won’t spend time on that, focusing instead on practical interpretations and tips.

Raw R-squared measures the fraction of variation explained by the fitted predictive model. This is a good statistic for comparing models that all have the same number of terms (like comparing models consisting of A+B versus A+C). The downfall of this statistic is that it can be artificially increased simply by adding more terms to the model, even ones that are not statistically significant. For example, notice in Table 1 from an optimization experiment how R-squared increases as the model steps up in order from linear to two-factor interaction (2FI), quadratic and, finally, cubic (disregarding it being aliased).

The “adjusted” R-squared statistic corrects this ‘inflation’ by penalizing terms that do not add statistical value. Thus, the adjusted R-squared statistic generally levels off (at 0.8881 in this case) and then begins to decrease at some point as seen in Table 1 for the cubic model (0.8396). The adjusted R-squared value cannot be inflated by including too many model terms. Therefore, you should report this measure of model fit, not the raw R-squared.

The “predicted” R-squared is most rigorous for assessing model fit, so much so that it often starts off negative at the linear order, as it does for the example in Table 1 (-0.4682). As you can see, this statistic improves greatly as significant terms are added to the model, and quickly decreases once non-significant terms are added, e.g., going negative again at cubic. If predicted R-squared goes negative, the model becomes worse than nothing, that is, simply taking the average of the data (a “mean” model)—that is not good!

Figures 1 illustrates how the predicted R-squared peaks at the quadratic model for the example. Once a model emerges at the highest adjusted and/or predicted R-squared, consider taking out any insignificant terms—best done with the aid of a computerized reduction algorithm. This often produces a big increase in the predicted R-squared.

Conclusion

The goal of modeling data is to correctly identify the terms that explain the relationship between the factors and the response. Use the adjusted R-squared and predicted R-squared values to evaluate how well the model is working, not the raw R-squared.

PS: You’ve likely been reading this expecting to find recommended adjusted and predicted R-squared values. I will not be providing this. Higher values indicate that more variation in the data or in predictions is explained by the model. How you use the model dictates the threshold that is acceptable to you. If the DOE goal is screening, low values can be acceptable. Remember that low R-squared values do not invalidate significant p-values. In other words, if you discover factors that have strong effects on the response, that is positive information, even if the model doesn’t predict well. A low predicted R-squared means that there is more unexplained variation in the system, and you have more work to do!

Why it pays to be skeptical of three-factor-interaction effects

Quite often, when providing statistical help for Stat-Ease software users, our consulting team sees an over-selection of effects from two-level factorial experiments. Generally, the line gets crossed when picking three-factor interactions (3FI), as I documented in the lead article for the June 2007 Stat-Teaser. In this case, the experimenter picked all the estimable effects when only one main effect (factor B) really stood out on the Pareto plot. Check it out!

In my experience, the true 3FIs emerge only when one of the variables is categorical with a very strong contrast. For example, early in my career as an R&D chemical engineer with General Mills, I developed a continuous process for hydrogenating a vegetable oil. By cranking up the pressure and temperature and using an expensive, noble-metal catalyst (palladium on a fixed bed of carbon), this new approach increased the throughput tremendously over the old batch process, which deployed powered nickel to facilitate the reaction. When setting up my factorial experiment, our engineering team knew better than to make the type of reactor one of the inputs, because being so different, this would generate many complications of time-temperature interactions differing from on process to the other. In cases like this, you are far better off doing separate optimizations and then seeing which process wins out in the end. (Unfortunately for me, I lost this battle due to the color bodies in the oil poisoning my costly catalyst.)

A response must really behave radically to require a 3FI for modeling as illustrated hypothetically in Figures 1 versus 2 for two factors—catalyst level (B) and temperature (D)—as a function of a third variable (E)—the atmosphere in the reactor.

Figures 1 & 2: 3FI (BDE) surface with atmosphere of nitrogen vs air (Factor E at low & high levels)

These surfaces ‘flip-flop’ completely like a bird in flight. Although factor E being categorical does lead to a strong possibility of complex behavior from this experiment, the dramatic shift caused by it changing from one level to the other would be highly unusual by my reckoning.

It turns out that there is a middle ground with factorial models that obviates the need for third-order terms: Multiple two-factor interactions (2FIs) that share common factors. The actual predictive model, derived from a case study we present in our Modern DOE for Process Optimization workshop, is:

Yield = 63.38 + 9.88*B + 5.25*D − 3.00*E + 6.75*BD − 5.38*DE

Notice that this equation features two 2FIs, BD and DE, that share a common factor (D). This causes the dynamic behavior shown in Figures 3 and 4 without the need for 3FI terms.

Figure 3 & 4: 2FI surface (BD) for atmosphere of nitrogen vs air (Factor E at low & high levels)

This simpler model sufficed to see that it would be best to blanket the batch reactor with nitrogen, that is, do not leave the hatch open to the air—a happy ending.

Conclusion

If it seems from graphical or other methods of effect selection that 3FI(s) should be included in your factorial model, be on guard for:

- Over-selection of effects (my first case)

- The need for a transformation (such as log): Be sure to check the Box-Cox plot (always!).

- Outlier(s) in your response (look over the diagnostic plots, especially the residual versus run).

- A combination of these and other issues—ask stathelp@statease.com for guidance if you use Stat-Ease software (send in the file, please).

I never say “never”, so if you really do find a 3FI, get back to me directly.

-Mark (mark@statease.com)

2021 Online DOE Summit

Stat-Ease is hosting an amazing group of speakers for the 2021 Online DOE Summit. Taking place on September 28 & 29, this conference will be accessible to technical professionals around the globe. Register to attend the live in-person sessions via GotoWebinar, or plan to watch the on-demand recordings after the conference. Registration is free of charge.

Here is the line-up:

Speakers - September 28:

(Time 10am - 2pm US Central Time)

Hour 1: (Keynote) Martin Bezener (President & Chief Technical Officer, Stat-Ease)

Stat-Ease 360 Reveal

Hour 2: Gregory Hutto (Wing Operations Analyst, US Air Force)

The Tooth Fairy in Experimental Design: White Lies We Tell Our Software

Hour 3: Drew Landman (Professor & Associate Chair, Dept of Mech & Aero Engr, Old Dominion University)

An I-Optimal Split-Plot Design for eVTOL Tilt-Rotor Performance Characterization

Hour 4: Jason Pandolfo (Research Scientist, Quaker Chemical)

Using Logistic Regression to Predict the Stability of Metalworking Fluid Emulsions in Varying Water Quality Conditions

Speakers - September 29:

(Time 10am - 2pm US Central Time)

Hour 1: (Keynote) Hank Anderson (VP Software Development, Stat-Ease)

Python Integration with Stat-Ease 360 - A Tutorial

Hour 2: Oliver Thunich (Statistics Consultant, STATCON GmbH)

Adjusting a DOE to Unpredictable Circumstances

Hour 3: Steven Mullen (Senior Scientist, Cook Medical)

Improving Process Understanding of an IVF Cell Culture Incubator via Response Surface Methodology

Hour 4: Gregory Perrine (Research Scientist, Georgia-Pacific)

Debonder Formulation Optimization Using a KCV Mixture/Process Design in Paper Handsheets

We look forward to seeing you!

Please send any questions to Shari@statease.com.

Avoiding Over-Selection of Effects on the Half-Normal Plot

One of the greatest tools developed by Cuthbert Daniel (1976), was the use of the half-normal plot to visually select effects for two-level factorials. Since these designs generally contain no replicates, there is no pure error to use as the base for statistical F-tests. The half-normal plot allows us to visually distinguish between the effects that are small (and normally distributed) versus large (and likely to be statistically significant). The subsequent ANOVA is built on this decision to split the effects into the few that are likely “signal” versus the majority that are likely “noise”.

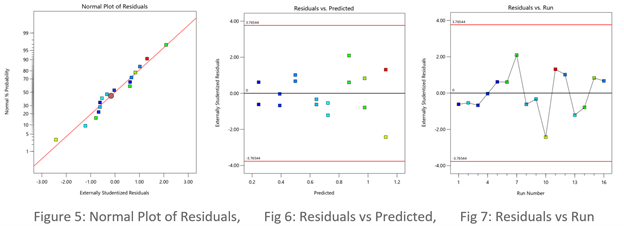

Often the split between the groups is obvious, with a clear gap between them (see Figure 1), but sometimes it is more ambiguous and harder to decide where to “draw the line” (see Figure 2).

Stat-Ease consultants recommend staying conservative when deciding which effects to designate as the “signal”, and to be cautious about over-selecting effects. In Figure 2, the A effect is clearly different from the other effects and should definitely be selected. The C effect is also separated from the other effects by a “gap” and is probably different, so it should also be included in the potential model terms. The next grouping consists of four three-factor interactions (3FI’s.) Extreme caution should be exercised here – 3FI terms are very rare in most production and research settings. Also, they fall “on the line”, which indicates that they are most likely within the normal probability curve that contains the insignificant effects. These terms should be pooled together to estimate the error of the system. The conservative approach says that choosing A and C for the model is best. Adding any other terms is most likely just chasing noise.

Hints for choosing effects:

- Split the effects into two groups, distinguishing between the “big” and “small” ones, right versus left, respectively

- Start from the right side of the graph – that is where the biggest effects are

- Look for gaps that separate big effects from the rest of the group

- STOP if you select a 3FI term – these are very unlikely to be real effects (throw them back into the error pool)

- Don’t skip a term – if a smaller effect looks like it could be significant, then all larger effects also must be included

- Effects need to “jump off” the line – otherwise they are just part of the normal distribution

Sometimes you have to simply accept that the changes in the factor levels did not trigger a change in the response that was larger than the normal process variation (Figure 3). Note in Figure 3 that the far right points are straddling the straight line. These terms have virtually the same size effect – don’t select the lower one just because it is below the line.

When you are lucky enough to have replicates, the pure error is then used to help position green triangles on the half-normal plot. The triangles span the amount of error in the system. If they go out farther than the biggest effect, that is a clear indication that there are no effects that are larger than the normal process variation. No effects are significant in this case (see Figure 4).

Conclusion

The half-normal plot of effects gives us a visual tool to split our effects into two groups. However, the use of the tool is a bit of an art, rather than an exact science. Combine this visual tool with both the ANOVA p-values and, most importantly, your own subject matter knowledge, to determine which effects you want to put into the final prediction model.