Stat-Ease Blog


Greg's DOE Adventure: Important Statistical Concepts behind DOE

If you read my previous post, you will remember that design of experiments (DOE) is a systematic method used to find cause-and-effect relationships. That systematic method includes a lot of (frightening music here!) statistics.

[I’ll be honest here. I was a biology major in college. I was forced to take a statistics course or two. I didn’t really understand why I had to take it. I also didn’t understand what was being taught. I know a lot of others who didn’t understand it as well. But it’s now starting to come into focus.]

Before getting into the concepts of DOE, we must get into the basic concepts of statistics (as they relate to DOE).

Basic Statistical Concepts:

Variability

In an experiment or process, you have inputs you control, the output you measure, and uncontrollable factors that influence the process (things like humidity). These uncontrollable factors (along with other things like sampling differences and measurement error) are what lead to variation in your results.

Mean/Average

We all pretty much know what this is, right? Add up all your scores, divide by the number of scores, and you have the average score.

Normal distribution

Also known as a bell curve because of its shape. The peak of the curve sits at the average, and the curve tails off symmetrically to the left and right.
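To see the bell shape in numbers, here is a minimal sketch using Python's standard `statistics.NormalDist`. The average of 10 and the spread (`sigma`, the standard deviation covered later in this post) of 2 are made-up values for illustration only.

```python
from statistics import NormalDist

# Hypothetical normal distribution: average 10, standard deviation 2.
bell = NormalDist(mu=10, sigma=2)

# The curve is highest at the average...
print(bell.pdf(10) > bell.pdf(9))     # True
# ...and tails off the same way on both sides of it.
print(bell.pdf(9) == bell.pdf(11))    # True

# Share of results expected within one standard deviation of the average.
within_one_sd = bell.cdf(12) - bell.cdf(8)
print(round(within_one_sd, 3))        # 0.683
```

Roughly two-thirds of the results fall within one standard deviation of the average, which is why most of the "bell" sits close to the peak.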

Variance

Variance is a measure of the variability in a system (see above). Let’s say you have a bunch of data points for an experiment. You can find the average of those points (see above). For each data point, subtract that average, so you see how far each piece of data is from the average. Then square that difference. Why? Points below the average give negative differences and points above it give positive ones, so if you simply added them up they would cancel out (in fact, the differences from the average always add up to zero). Squaring makes every term positive. Now add up all the squared differences and divide that total by the number of data points you started with. You are essentially taking an average of the squares of the differences from the mean. (When your data are a sample, as they are in an experiment, statisticians usually divide by n − 1 instead of n; that version is written s². With many data points, the difference is small.)

That is your variance, summarized by the following equation:

$$\text{Variance} = \frac{1}{n}\sum_{i=1}^{n}\left(Y_i - \bar{Y}\right)^2$$
In this equation:

Yᵢ is the i-th data point

Ȳ is the average of all the data points

n is the number of data points
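The recipe above can be followed step by step in a few lines of Python. This is a minimal sketch on five made-up data points; the standard-library calls at the end just confirm the hand calculation (`pvariance` divides by n, `variance` divides by n − 1).

```python
from statistics import pvariance, variance

# Hypothetical data points (the Y_i values) from an experiment.
data = [4.0, 8.0, 6.0, 5.0, 7.0]
n = len(data)

y_bar = sum(data) / n                    # the average, Y-bar
deviations = [y - y_bar for y in data]   # how far each point is from the average
squared = [d ** 2 for d in deviations]   # squaring removes the minus signs

print(sum(deviations))                   # 0.0: the raw differences cancel out
var_n = sum(squared) / n                 # divide by n, as in the equation above
print(var_n)                             # 2.0

# Standard library check: divide by n, then by n - 1 (the sample version, s^2).
print(pvariance(data), variance(data))   # 2.0 2.5
```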

Standard Deviation

Take the square root of the variance. The variance is the average of the squares of the differences from the mean, so taking its square root gets you back to the original units. One item I just found out: even though standard deviations are in the original units, you can’t simply add or subtract them when you combine independent sources of variation. You have to work with variances (s²), do your math, then take the square root to convert back.
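Here is a small worked example of that rule, assuming two independent sources of variation. The labels (process and measurement gauge) and the values 3 and 4 are hypothetical, chosen so the arithmetic is easy to follow; the seeded simulation at the end is only a sanity check.

```python
import random
from math import sqrt
from statistics import stdev

# Hypothetical independent sources of variation (standard deviations).
sd_process, sd_gauge = 3.0, 4.0

# Adding standard deviations directly overstates the total spread.
print(sd_process + sd_gauge)                      # 7.0
# Instead, add the variances, then take the square root.
sd_total = sqrt(sd_process ** 2 + sd_gauge ** 2)
print(sd_total)                                   # 5.0

# Simulation check (seeded so it repeats): sum the two independent sources.
rng = random.Random(1)
totals = [rng.gauss(0, sd_process) + rng.gauss(0, sd_gauge) for _ in range(100_000)]
print(stdev(totals))                              # close to 5, not 7
```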

Greg's DOE Adventure: What is Design of Experiments (DOE)?

Hi there. I’m Greg. I’m starting a trip. This is an educational journey through the concept of design of experiments (DOE). I’m doing this to better understand the company I work for (Stat-Ease), the product we create (Design-Expert® software), and the people we sell it to (industrial experimenters). I will be learning as much as I can on this topic, then I’ll write about it. So, hopefully, you can learn along with me. If you have any comments or questions, please feel free to comment at the bottom.

So, off we go. First things first.

What exactly is design of experiments (DOE)?

When I first decided to do this, I went to Wikipedia to see what they said about DOE. No help there.

“The design of experiments (DOE, DOX, or experimental design) is the design of any task that aims to describe or explain the variation of information under conditions that are hypothesized to reflect the variation.” –Wikipedia

The what now?

That’s not what I would call a clearly conveyed message. After some more research, I have compiled this ‘definition’ of DOE:

Design of experiments (DOE), at its core, is a systematic method used to find cause-and-effect relationships. So, as you are running a process, DOE determines how changes in the inputs to that process change the output.

Obviously, that works for me since I wrote it. But does it work for you?

So, conceptually I’m off and running. But why do we need ‘designed experiments’? After all, isn’t all experimentation about combining some inputs, measuring the outputs, and looking at what happened?

The key words above are ‘systematic method’. It turns out that if we stick to statistical concepts, we can get a lot more out of our experiments. That is what I’m here for: understanding the ‘concepts’ within this ‘systematic method’ and why they are advantageous.

Well, off I go on my journey!

Correlation vs. causality

Recently, Stat-Ease Founding Principal, Pat Whitcomb, was interviewed to get his thoughts on design of experiments (DOE) and industrial analytics. It was very interesting, especially to this relative newbie to DOE. One passage really jumped out at me:

“Industrial analytics is all about getting meaning from data. Data is speaking and analytics is the listening device, but you need a hearing aid to distinguish correlation from causality. According to Pat Whitcomb, design of experiments (DOE) is exactly that. ‘Even though you have tons of data, you still have unanswered questions. You need to find the drivers, and then use them to advance the process in the desired direction. You need to be able to see what is truly important and what is not,’ says Pat Whitcomb, Stat-Ease founder and DOE expert. ‘Correlations between data may lead you to assume something and lead you on a wrong path. Design of experiments is about testing if a controlled change of input makes a difference in output. The method allows you to ask questions of your process and get a scientific answer. Having established a specific causality, you have a perfect point to use data, modelling and analytics to improve, secure and optimize the process.’"

It was the line ‘distinguish correlation from causality’ that got me thinking. It’s a powerful difference, and one that many people don’t understand.

As I was mulling over this topic, I got into my car to drive home and played one of the podcasts I listen to regularly. It happened to be an interview with psychologist Dr. Fjola Helgadottir and her research into social media and mental health. As you may know, there has been a lot of attention paid to depression and social media use. When she brought up the concept of correlation and causality it naturally caught my attention. (And no, let’s not get into Jung’s concept of Synchronicity and whether this was a meaningful coincidence or not.)

The interesting thing that Dr. Helgadottir brought up was the correlation between social media and depression, which the general population often misunderstands as causality. She went on to say that recent research has not shown any causal link between the two, but it has shown that people who are depressed tend to use social media more than other people. So there is a correlation between social media use and depression, but that correlation alone does not show that one causes the other.

So, back to Pat’s comments. The data is speaking. We all need a listening device to tell us what it’s saying. For those of you in the world of industrial experimentation, experimental design can be that device that differentiates the correlations from the causality.

Design-Expert Favorite Feature: Sharing the Magic of the Model!

The situation: You have successfully run an experiment and analyzed the data. The results include a prediction equation with a high predicted R-squared that will be useful for many purposes. How can you share this with colleagues?

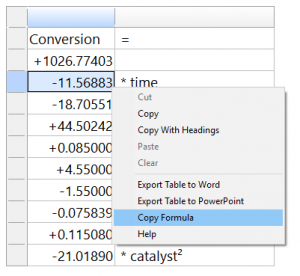

The solution: Design-Expert® software has a little-known but useful “Copy Equation” function that allows you to export the prediction equation to MS Excel so that others can use it for future work, without needing a copy of Design-Expert software. The advantage of using this function is that it brings in all the essential significant digits, including ones not showing on your screen. This accuracy is critical to getting correct predictive values.

1. Go to the ANOVA tab for the response. Find the Actual Equation, located in the lower right corner by default.

2. Right-click on the equation and select Copy Equation.

3. Open Excel, select the cell where you want the equation, and press Ctrl-V to paste it. (Pasting with Ctrl-V keeps the spreadsheet functionality working.)

4. As shown in the figure (coloration added within Excel), the blue cells allow the user to enter actual factor settings. These values are used in the prediction equation, with the result showing in the yellow cell.
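Why do the extra significant digits matter? This sketch evaluates a made-up two-factor prediction equation in actual units, once with full-precision coefficients and once with the coefficients rounded to two decimals, as might happen if they were retyped from a rounded display. The coefficients, the factor names A and B, and the settings 150 and 600 are hypothetical, not from a real Design-Expert model.

```python
# Hypothetical actual-equation coefficients (NOT from a real model).
full = {"intercept": 12.3456, "A": 0.04321, "B": -0.000987, "AB": 0.0000456}

def predict(coef, a, b):
    """Evaluate the prediction equation at actual factor settings a and b."""
    return coef["intercept"] + coef["A"] * a + coef["B"] * b + coef["AB"] * a * b

# Same equation, coefficients rounded to 2 decimal places.
rounded = {term: round(value, 2) for term, value in full.items()}

print(round(predict(full, 150, 600), 4))     # 22.3389
print(round(predict(rounded, 150, 600), 4))  # 18.35
```

Small coefficients on factors entered in large actual units (here the A×B term) contribute a lot to the prediction, so dropping their digits changes the answer noticeably.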

You can also view this process in this video.

Good luck with your experimenting!

Design of Experiments (DOE): The Secret Weapon in Medical Device Design

“Multifactor testing via design of experiments (DOE) is the secret weapon for medical-device developers,” says Stat-Ease Principal Mark Anderson, lead author of the DOE and RSM Simplified series*. “They tend to keep their success to themselves to keep their competitive edge, but one story that we can share documents how a multinational manufacturer doubled their production rate while halving the variation of critical-to-quality performance. That’s powerful stuff!”

The case study that Mark is referring to can be found here: RSM for Med Devices.

Stat-Ease has guided many manufacturers in the medical device industry in streamlining their products and processes. Now, we have put that experience together into one place, the Designed Experiments for Medical Devices workshop.

In this one-of-a-kind workshop, learn how to optimize your medical device or process. Our DOE experts will show you how to use Design-Expert® software to help you save money or time while ensuring the quality of your product. We welcome scientists, engineers, and technical professionals working in this field, as well as organizations and institutions that devote most of their efforts to research, development, technology transfer, or commercialization of medical devices. Throughout the course, you will get hands-on experience with cases that come directly from this industry.

Over two fast-paced days, explore the use of fractional factorial designs for the screening and characterization of products or processes. Also see how to achieve top performance via response surface designs and multiple response optimization. Practice applying all these DOE tools while working through cases involving medical device design, pacemaker lead stress testing, and typical processes that may be used in the testing or production of these devices, such as seal strength, soldering, dimensional analysis, and more.
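For readers new to the term, here is a minimal sketch of the smallest fractional factorial: a half fraction of a three-factor, two-level design, built with the standard generator C = AB. Factors are in coded units (−1 = low, +1 = high); the factor names are generic placeholders, and this is only an illustration, not how the workshop or Design-Expert builds designs.

```python
from itertools import product

# Full two-level factorial in A and B; C is set by the generator C = A*B.
# Result: 4 runs instead of the 8 in the full 2^3 design.
runs = [(a, b, a * b) for a, b in product((-1, 1), repeat=2)]

for a, b, c in runs:
    print(f"A={a:+d}  B={b:+d}  C={c:+d}")
```

The price of halving the runs is aliasing: the main effect of C cannot be separated from the A×B interaction, which is acceptable in screening when interactions are expected to be small.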

For dates, location, cost, and more information about this workshop, visit: www.statease.com/training/workshops/class-demd-adin.html

About Stat-Ease and DOE

Based in Minneapolis, Stat-Ease has been a leading provider of design of experiments (DOE) software, training, and consulting since its founding in 1982. Using these powerful statistical tools, industrial experimenters can greatly accelerate the pace of process and product development, manufacturing troubleshooting, and overall quality improvement. Via its multifactor testing methods, DOE quickly leads users to the elusive sweet spot where all requirements are met at minimal cost. The key to DOE is that, by varying many factors simultaneously rather than one at a time, it enables breakthrough discoveries of previously unknown synergisms.

* DOE Simplified is a comprehensive introductory text on design of experiments.

RSM Simplified is a simple and straightforward approach to response surface methods.