Stat-Ease Blog


Are optimal response surface method (RSM) designs always the optimal choice?

Most people who have been exposed to design of experiments (DOE) concepts have probably heard of factorial designs, which target the discovery of factor and interaction effects on a process. But factorial designs are hardly the only tool in the shed. Often, to properly optimize a system, a more advanced response surface method (RSM) design will prove beneficial, or even essential.

This is the case when there is “curvature” within the design space, suggesting that quadratic (or higher) order terms are needed to make valid predictions between the extreme high/low process factor settings. This gives us the opportunity to find optimal solutions that reside in the interior of the design space. If you include center points in a factorial design, you can check for non-linear behavior within the design space to see if an RSM design would be useful (1). But which RSM options should you pick?
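As a rough illustration of the center-point check described above, the following Python sketch compares the average response at the factorial points with the average at replicated center points. The function name and the response values are hypothetical, and the t-like ratio uses only the center-point replicates to estimate pure error, which is a simplifying assumption rather than the calculation Stat-Ease software performs.

```python
import numpy as np

def curvature_check(factorial_y, center_y):
    """Return (difference, t-like ratio) for center mean minus factorial mean.

    A large ratio hints that a straight-line/interaction model misses
    curvature in the middle of the design space.
    """
    factorial_y = np.asarray(factorial_y, dtype=float)
    center_y = np.asarray(center_y, dtype=float)
    difference = center_y.mean() - factorial_y.mean()
    # Pure-error variance estimated from the replicated center points only
    # (assumption made for this sketch).
    pure_error_var = center_y.var(ddof=1)
    std_err = np.sqrt(pure_error_var * (1 / len(factorial_y) + 1 / len(center_y)))
    return difference, difference / std_err

# Hypothetical responses: four factorial runs and three center-point replicates.
difference, ratio = curvature_check([10, 12, 14, 16], [18.0, 18.2, 17.8])
print(f"center minus factorial mean: {difference:.2f}, ratio: {ratio:.1f}")
```

When the ratio is large relative to a t reference value, the planar model is probably not adequate in the interior, and an RSM design becomes worth the extra runs.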

Let’s start by introducing the Stat-Ease® software menu options for RSM designs. Once we understand the alternatives, we can better judge which is most useful in a given situation, and why optimal designs are great when needed.

- First on the list is the central composite design (our software default)

- Next is the Box-Behnken design

- And third is something called optimal design

Stat-Ease software design selection options

The natural question that often pops up is this: since optimal designs are third on our list, are we defaulting to suboptimal designs? Let’s dig in a bit deeper.

The central composite design (“CCD”) has traditionally been the workhorse of response surface methods. It has a predictable structure (5 levels for each factor). It is robust to some variations in the actual factor settings, meaning that you will still get decent quadratic model fits even if the axial runs have to be tweaked to achieve some practical values, including the extreme case when the axial points are placed at the face of the factorial “cube” making the design a 3-level study. A CCD is the design of choice when it fits the problem and generally creates predictive models that are effective throughout the design space--the factorial region of the design. Note that the quadratic predictive models generally improve when the axial points reside outside the face of the factorial cube.
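To make the 5-level structure concrete, here is a minimal Python sketch that builds a CCD in coded units from factorial corners, axial points, and center points. The function names are hypothetical, and the default axial distance is the textbook rotatable value (the fourth root of the number of factorial runs), which may differ from what the software chooses for a given design.

```python
import itertools
import numpy as np

def central_composite(n_factors, alpha=None, n_center=1):
    """Return a coded-unit CCD: factorial corners, axial points, center points."""
    corners = np.array(list(itertools.product([-1.0, 1.0], repeat=n_factors)))
    if alpha is None:
        # Textbook rotatable choice: fourth root of the number of factorial runs.
        alpha = len(corners) ** 0.25
    axial = np.vstack([alpha * np.eye(n_factors), -alpha * np.eye(n_factors)])
    center = np.zeros((n_center, n_factors))
    # Adding 0.0 turns any -0.0 entries into plain 0.0 for tidy printing.
    return np.vstack([corners, axial, center]) + 0.0

def levels(design, factor=0):
    """Distinct settings used for one factor."""
    return np.unique(np.round(design[:, factor], 6))

rotatable = central_composite(2)                # axial points outside the cube
face_centered = central_composite(2, alpha=1.0) # axial points on the cube faces

print(levels(rotatable))       # five distinct levels per factor
print(levels(face_centered))   # collapses to three levels per factor
```

Setting `alpha=1.0` reproduces the face-centered case mentioned above, where the design becomes a 3-level study.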

When a 5-level study is not practical, for example, if we are looking at catalyst levels and the lower axial point would be zero or a negative number, we may be forced to bring the axial points to the face of the factorial cube. When this happens, Box-Behnken designs would be another standard design to consider. It is a 3-level design that is laid out slightly differently than a CCD. In general, the Box-Behnken results in a design with marginally fewer runs and is generally capable of creating very useful quadratic predictive models.
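For comparison, the sketch below builds the standard three-factor Box-Behnken layout in coded units: each pair of factors is run at its four high/low combinations while the third factor sits at its midpoint, plus center points. The function name and the choice of three center points are assumptions for illustration only, and the simple pairing used here is written for three factors, not as a general construction.

```python
import itertools
import numpy as np

def box_behnken_3(n_center=3):
    """Three-factor Box-Behnken design in coded units (-1, 0, +1)."""
    runs = []
    for i, j in itertools.combinations(range(3), 2):
        for a, b in itertools.product([-1.0, 1.0], repeat=2):
            point = [0.0, 0.0, 0.0]
            point[i], point[j] = a, b   # two factors at extremes, one at center
            runs.append(point)
    runs.extend([[0.0, 0.0, 0.0]] * n_center)
    return np.array(runs)

bbd = box_behnken_3()
print(bbd.shape)        # rows = 12 edge midpoints + center points
print(np.unique(bbd))   # only three levels are ever used
```

Note that no run sits at a corner of the cube, and the 12 non-center runs compare with 14 (8 corners plus 6 axial points) for a three-factor CCD.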

These standard designs are very effective when our experiments can be performed precisely as scripted by the design template. But this is not always the case, and when it is not we will need to apply a more novel approach to create a customized DOE.

Optimal designs are “custom” creations that come in a variety of alphabet-soup flavors—I, D, A, G, etc. The idea with optimal designs is that given your design needs and run-budget, the optimization algorithm will seek out the best choice of runs to provide you with a useful predictive model that is as effective as possible. Use of the system defaults when creating optimal designs is highly advised. Custom optimal designs often have fewer runs than the central composite option. Because they are generated by a computer algorithm, the number of levels per factor and the positioning of the points in the design space may be unique each time the design is built. This may make newcomers to optimal designs a bit uneasy. But, optimal designs fill the gap when:

- The design space is not “cuboidal”— there are constraints on the operating region that make the design space lopsided or truncated.

- There are categoric or discrete numeric factors to deal with.

- The expected polynomial model is something other than a full quadratic.

- You are trying to augment an existing design to expand the design space or to upgrade to a higher order model.

The classic designs provide simple and robust solutions and should always be considered first when planning an experiment. However, when these designs don’t work well because of budget or practical design space constraints, don’t be afraid to go “outside the box” and explore your other options. The goal is to choose a design that fits the problem!

Acknowledgement: This post is an update of an article by Shari Kraber on “Modern Alternatives to Traditional Designs” published in the April 2011 STATeaser.

(1) See Shari Kraber’s blog post, “Energize Two-Level Factorials - Add Center Points!” from August 23, 2018 for additional insights.

January Publication Roundup

Here's the latest Publication Roundup! In these monthly posts, we'll feature recent papers that cited Design-Expert® or Stat-Ease® 360 software. Please submit your paper to us if you haven't seen it featured yet!

Featured Article

Quantification of Menthol by Chromatography Applying Analytical Quality by Design

Chromatographia, Published: 17 January 2026

Authors: Santiago Puentes, Julian Puentes, Ronald Andrés Jiménez & Emerson León Ávila

Mark's comments: Well done deploying the Ishikawa (fishbone) diagram to consider all the factors that might affect the analytical method. This is a great application of a multifactor approach to achieve quality by design.

Be sure to check out this important study, and the other research listed below!

More new publications from January

- UHPLC Method Development for the Trace Level Quantification of Anastrozole and Its Process-Related Impurities Using Design of Experiments

Biomedical Chromatography, Volume 40, Issue 2 February 2026 e70279

Authors: Narasimha Rao Tadisetty, Ramesh Yanamandra, Subhalaxmi Pradhan, Gitanjali Pradhan

- Combinatorial Anti-Mitotic Activity of Loratadine/5-Fluorouracil Loaded Zein Tannic Acid Nanoparticles in Breast Cancer Therapy: In silico, in vitro and Cell Studies

International Journal of Nanomedicine, 2026; 21:1-27

Authors: Mohamed Hamdi, Moawia M Al-Tabakha, Isra H Ali, Islam A Khalil

- Synergistic effects and optimization of palm oil fuel ash and jute fiber in sustainable concrete

Scientific Reports volume 16, Article number: 259 (2026)

Authors: Muhammad Umer, Nawab Sameer Zada, Paul O. Awoyera, Muhammad Basit Khan, Wisal Ahmed, Olaolu George Fadugba

- Harnessing sawdust for clean energy: production and optimization of bioethanol in Nigeria

MOJ Ecology & Environmental Sciences, 2026; 11(1):5‒11.

Authors: Ifeoma Juliet Opara, Eno-obong Sunday Nicholas, Ezekiel Adi Siman

- Antibiotic-phytochemical combinations against Enterococcus faecalis: a therapeutic strategy optimized using response surface methodology

Antonie van Leeuwenhoek, 119, 41 (2026)

Authors: Monikankana Dasgupta, Alakesh Maity, Ranojit Kumar Sarker, Payel Paul, Poulomi Chakraborty, Sarita Sarkar, Ritwik Roy, Moumita Malik, Sharmistha Das & Prosun Tribedi

- Breakthrough RP-HPLC strategy for synchronous analysis of pyridine and its degradation products in powder for injection using quality metrics

Scientific Reports volume 16, Article number: 1344 (2026)

Authors: Asma S. Al‐Wasidi, Noha S. katamesh, Fahad M. Alminderej, Sayed M. Saleh, Hoda A. Ahmed, Mahmoud A. Mohamed

December Publication Roundup

Here's the latest Publication Roundup! In these monthly posts, we'll feature recent papers that cited Design-Expert® or Stat-Ease® 360 software. Please submit your paper to us if you haven't seen it featured yet!

Featured Article

Sustainable protection for mild steel (S235): Anti-corrosion effectiveness of natural Paraberlinia bifoliolata barks extracts in HCl 1 M

Hybrid Advances, Volume 11, December 2025, 100526

Authors: Liliane Nga, Benoit Ndiwe, Jean Jalin Eyinga Biwolé, Armand Zébazé Ndongmo, Achille Desiré Omgba Béténé, Yvan Sandy Nké Ayinda, Joseph Zobo Mfomo, Cesar Segovia, Antonio Pizzi, Achille Bernard Biwolé

Mark's comments: I like this for its application of response surface methods to thoroughly explore three process factors for optimization of a 'green' corrosion inhibitor. They then provide a scientific explanation of its "remarkable effectiveness."

Be sure to check out this important study, and the other research listed below!

More new publications from December

- Sustainable synthesis of Mangenese cobalt oxide nanocomposite on natural clay and optimization using response surface methodology for ciprofloxacin degradation via peroxymonosulfate activation

Applied Surface Science, Volume 712, 7 December 2025, 164136

Authors: Amira Hrichi, Nesrine Abderrahim, Hédi Ben Amor, Marta Pazos, Maria Angeles Sanromán

- Optimization of fermentation conditions for lactic acid production by Lactiplantibacillus plantarum OM510300 using plantain peduncles as substrate

Scientific African, Volume 30, December 2025, e02998

Authors: Oluwafemi Adebayo Oyewole, Japhet Gaius Yakubu, Nofisat Olaide Oyedokun, Konjerimam Ishaku Chimbekujwo, Priscila Yetu Tsado, Abdullah Albaqami

- QbD-Based HPLC Method Development for Trace-Level Detection of Genotoxic 3-Methylbenzyl Chloride in Meclizine HCl Formulations

Biomedical Chromatography, Volume 39, Issue 12, December 2025, e70238

Authors: Naga Kranthi Kumar Chintalapudi, Naresh Podila, Vijay Kumar Chollety

- Multi-objective intelligent optimization design method for mix proportions of hydraulic asphalt concrete facings

Ain Shams Engineering Journal, Volume 16, Issue 12, December 2025, 103781

Authors: Hanye Xiong, Zhenzhong Shen, Yiqing Sun, Yaxin Feng, Hongwei Zhang

- Sustainable pretreatment and adsorption of chemical oxygen demand from car wash wastewater using Noug sawdust activated carbon

Scientific Reports volume 15, Article number: 42464 (2025)

Authors: Getasew Yirdaw, Abraham Teym, Wolde Melese Ayele, Mengesha Genet, Ahmed Fentaw Ahmed, Assefa Andargie Kassa, Tilahun Degu Tsega, Chalachew Abiyu Ayalew, Getaneh Atikilt Yemata, Tesfaneh Shimels, Rahel Mulatie Anteneh, Abathun Temesgen, Gashaw Melkie Bayeh, Almaw Genet Yeshiwas, Habitamu Mekonen, Berhanu Abebaw Mekonnen, Meron Asmamaw Alemayehu, Sintayehu Simie Tsega, Zeamanuel Anteneh Yigzaw, Amare Genetu Ejigu, Wondimnew Desalegn Addis, Birhanemaskal Malkamu, Kalaab Esubalew Sharew, Daniel Adane, Chalachew Yenew

- Ternary phase optimized indomethacin nanoemulsion hydrogel for sustained topical delivery and improved biological efficacy

Scientific Reports volume 15, Article number: 43322 (2025)

Authors: K. R. Nithin, G. M. Pallavi, K. S. Srikruthi, Kasim Sakran Abass, Nimbagal Raghavendra Naveen

- Numerical and experimental investigation of CI engine parameters using palm biodiesel with diesel blends

Scientific Reports volume 15, Article number: 43399 (2025)

Authors: B. Musthafa, M. Prabhahar

- Development a practical method for calculation of the block volume and block surface in a fractured rock mass

Scientific Reports volume 15, Article number: 44068 (2025)

Authors: Alireza Shahbazi, Ali Saeidi, Alain Rouleau, Romain Chesnaux

- Biofabrication, statistical optimization, and characterization of collagen nanoparticles synthesized via Streptomyces cell-free system for cancer therapy

Scientific Reports volume 15, Article number: 43835 (2025)

Authors: Noura El-Ahmady El-Naggar, Shaimaa Elyamny, Ahmad G. Shitifa, Asmaa Atallah El-Sawah

November Publication Roundup

Here's the latest Publication Roundup! In these monthly posts, we'll feature recent papers that cited Design-Expert® or Stat-Ease® 360 software. Please submit your paper to us if you haven't seen it featured yet!

Featured Article

Lipid nanocapsule-chitosan and iota-carrageenan hydrogel composite for sustained hydrophobic drug delivery

Scientific Reports volume 15, Article number: 42349 (November 27, 2025)

Authors: Grady K. Mukubwa, Justin B. Safari, Zikhona N. Tetana, Caroline N. Jones, Roderick B. Walker, Rui W. M. Krause

Mark's comments: This is an outstanding application of mixture design for optimal formulation of a novel composite system for enhancing oral delivery of hydrophobic antiviral drugs--a very worthy cause.

Be sure to check out this important study, and the other research listed below!

More new publications from November

- Design and optimization of lamivudine-loaded nanostructured lipid carriers: Improved lipid screening for effective drug delivery

Journal of Drug Delivery Science and Technology, Volume 113, November 2025, 107349

Authors: Hüsniye Hande Aydın, Esra Karataş, Zeynep Şenyiğit, Hatice Yeşim Karasulu

- Improving the efficacy and targeting of carvedilol for the management of diabetes-accelerated atherosclerosis: An in vitro and in vivo assessment

European Journal of Pharmacology, Volume 1006, 5 November 2025, 178134

Authors: Marwa M. Nagib, Ala Hussain Haider, Amr Gamal Fouad, Sherif Faysal Abdelfattah Khalil, Amany Belal, Fahad H. Baali, Nisreen Khalid Aref Albezrah, Alaa Ismail, Fatma I. Abo El-Ela

- Evaluating the bioavailability and therapeutic efficacy of nintedanib-loaded novasomes as a therapy for non-small cell lung cancer

Journal of Pharmaceutical Sciences, Volume 114, Issue 11, 103998, November 2025

Authors: Tamer M. Mahmoud, Amr Gamal Fouad, Amany Belal, Alaa Ismail, Fahad H. Baali, Mohammed S Alharthi, Ahmed H.E. Hassan, Eun Joo Roh, Alaa S. Tulbah, Fatma I. Abo El-Ela

- Process optimization and quality assessment of redistilled Ethiopian traditional spirit (Areke): ethanol yield and zinc contamination control

Scientific Reports volume 15, Article number: 39187 (November 10, 2025)

Authors: Estifanos Kassahun, Abdiwak Tamene, Yobsen Tadesse, Bethlehem Semagn, Addisu Sewunet, Shiferaw Ayalneh, Solomon Tibebu

- Box–Behnken design approach for optimizing the removal of non-steroidal anti-inflammatory drugs from environmental water samples using magnetic nanocomposite

Scientific Reports volume 15, Article number: 40223 (November 17, 2025)

Authors: Chuanyong Yan, Ying Zhang, Li Feng

- Influence of process parameters on single-cell oil production by Cutaneotrichosporon oleaginosus using response surface methodology

Biotechnology for Biofuels and Bioproducts, Volume 18, article number 115 (19 November 2025)

Authors: Max Schneider, Felix Melcher, Robert Fimmen, Johannes Mertens, Daniel Garbe, Michael Paper, Marion Ringel, Thomas Brück

- Rheology, strength and durability performance of bentonite-enhanced high-performance concrete

Scientific Reports volume 15, Article number: 40487 (November 18, 2025)

Authors: M. Achyutha Kumar Reddy, Veerendrakumar C. Khed, Naga Chaitanya Kavuri

- Surface-engineered hyaluronic acid-coated lyotropic liquid crystalline nanoparticles for CD44-targeting of 3-Acetyl-11-keto-β-boswellic acid in rheumatoid arthritis treatment

Journal of Nanobiotechnology, Volume 23, article number 725, (20 November 2025)

Authors: Sakshi Priya, Vagesh Verma, Aniruddha Roy, Gautam Singhvi

- Synergistic enzyme action boosts phenolic compounds in flaxseed during germination using a two-level factorial design

Scientific Reports volume 15, Article number: 40384 (November 18, 2025)

Authors: Amal Z. Barakat, Azza M. Abdel-Aty, Hala A. Salah, Roqaya I. Bassuiny, Saleh A. Mohamed

Tips and tricks for designing statistically optimal experiments

Like the blog? Never miss a post - sign up for our blog post mailing list.

A fellow chemical engineer recently asked our StatHelp team about setting up a response surface method (RSM) process optimization aimed at establishing the boundaries of his system and finding the peak of performance. He had been going with the Stat-Ease software default of I-optimality for custom RSM designs. However, it seemed to him that this optimality “focuses more on the extremes” than modified distance or distance.

My short answer, published in our September-October 2025 DOE FAQ Alert, is that I do not completely agree that I-optimality tends to be too extreme. It actually does a lot better at putting points in the interior than D-optimality as shown in Figure 2 of "Practical Aspects for Designing Statistically Optimal Experiments." For that reason, Stat-Ease software defaults to I-optimal design for optimization and D-optimal for screening (process factorials or extreme-vertices mixture).

I also advised this engineer to keep in mind that, if users go along with the I-optimality recommended for custom RSM designs and keep the 5 lack-of-fit points added by default using a distance-based algorithm, they achieve an outstanding combination of ideally located model points plus other points that fill in the gaps.

For a more comprehensive answer, I will now illustrate via a simple two-factor case how the choice of optimality parameters in Stat-Ease software affects the layout of design points. I will finish up with a tip for creating custom RSM designs that may be more practical than ones created by the software strictly based on optimality.

An illustrative case

To explore options for optimal design, I rebuilt the two-factor multilinearly constrained “Reactive Extrusion” data provided via Stat-Ease program Help to accompany the software’s Optimal Design tutorial via three options for the criteria: I vs D vs modified distance. (Stat-Ease software offers other options, but these three provided a good array to address the user’s question.)

For my first round of designs, I specified coordinate exchange for point selection aimed at fitting a quadratic model. (The default option tries both coordinate and point exchange. Coordinate exchange usually wins out, but not always, because of the random seed in the selection algorithm. I did not want to take that chance.)

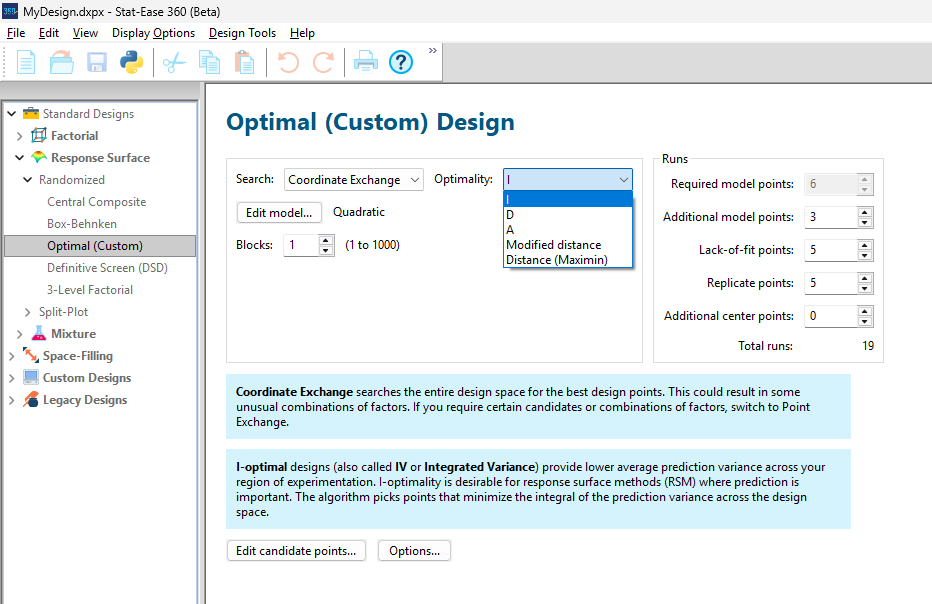

As shown in Figure 1, I added 3 additional model points for increased precision and kept the default numbers of 5 each for the lack-of-fit and replicate points.

Figure 1: Set up for three alternative designs—I (default) versus D versus modified distance

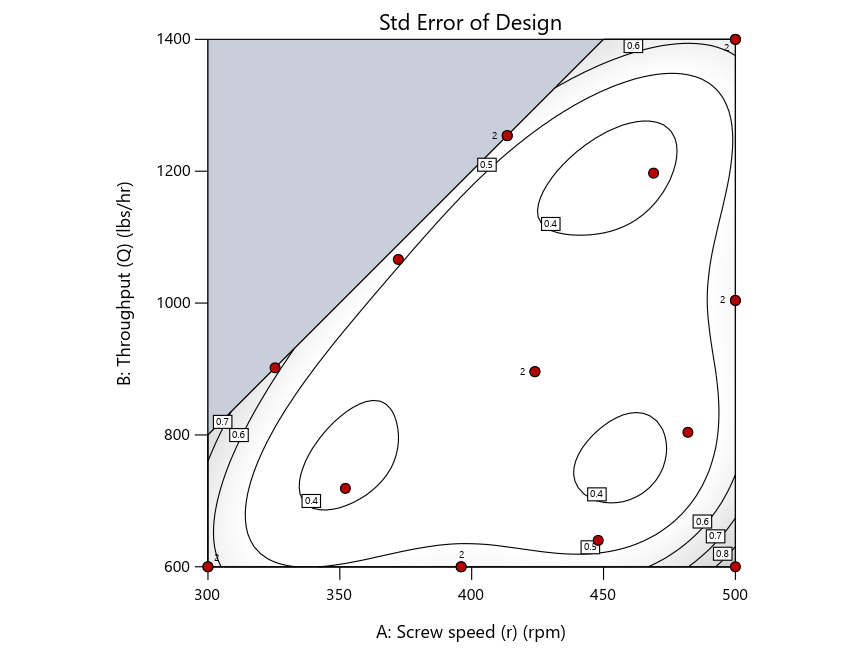

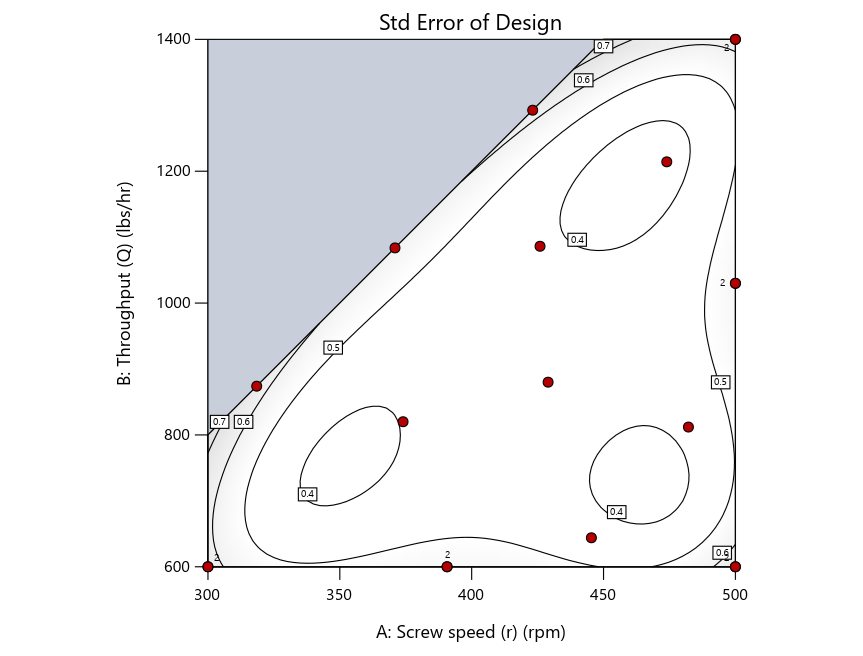

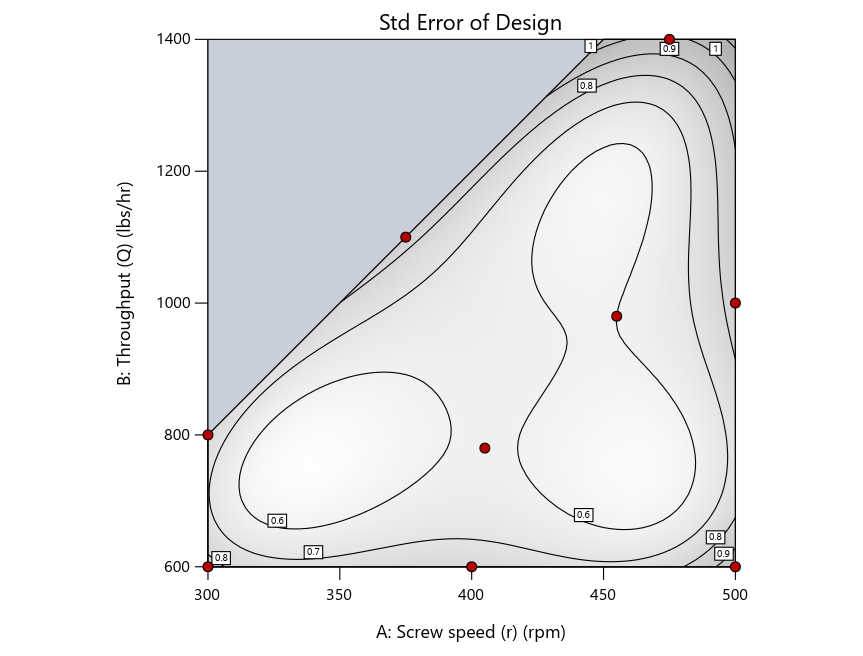

As seen in Figure 2’s contour graphs produced by Stat-Ease software’s design evaluation tools for assessing standard error throughout the experimental region, the differences in point location are trivial for only two factors. (Replicated points display the number 2 next to their location.)

Figure 2: Designs built by I vs D vs modified distance including 5 lack-of-fit points (left to right)

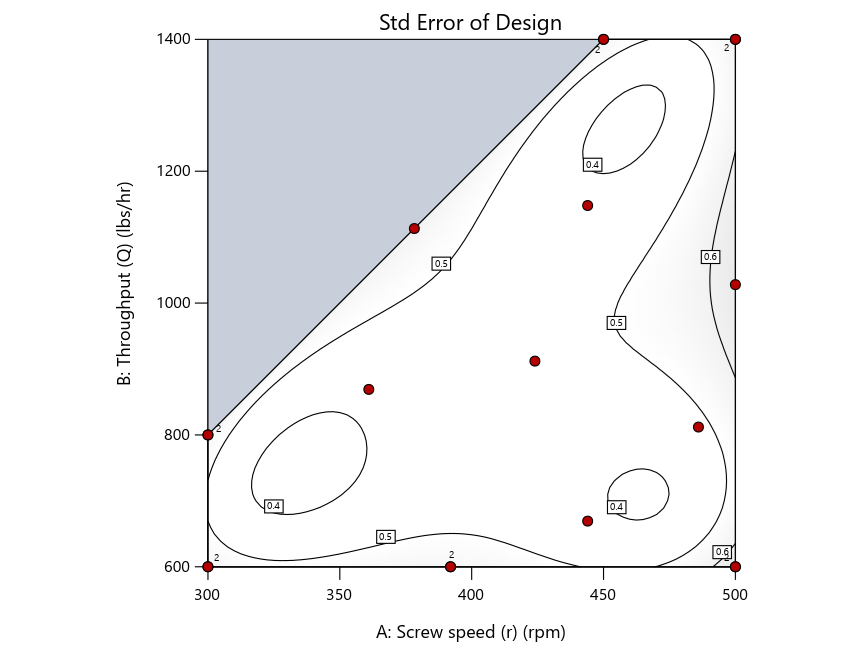

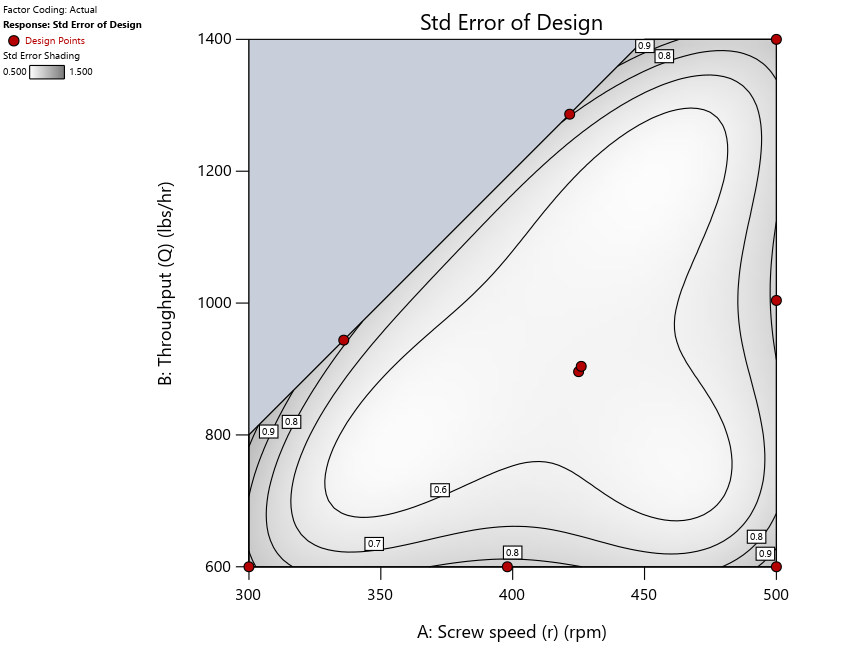

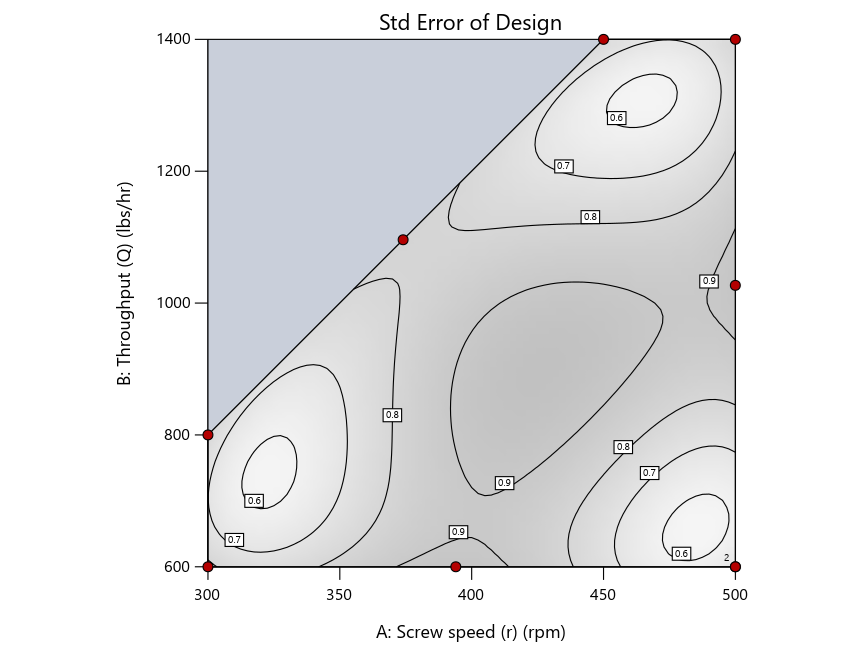

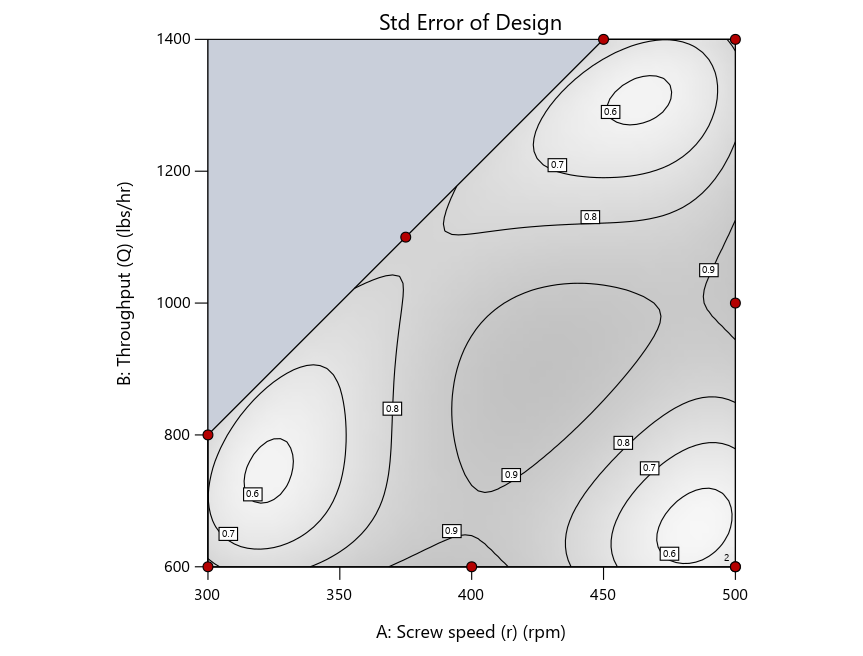

Keeping in mind that, due to the random seed in our algorithm, run-settings vary when rebuilding designs, I removed the lack-of-fit points (and replicates) to create the graphs in Figure 3.

Figure 3: Designs built by I vs D vs modified distance excluding lack-of-fit points (left to right)

Now you can see that D-optimal designs put points around the outside, whereas I-optimal designs put points in the interior, and the space-filling criterion spreads the points around. Due to the lack of points in the interior, the D-optimal design in this scenario features a big increase in standard error, as seen by the darker shading (a very helpful graphical feature in Stat-Ease software). It is the loser as a criterion for a custom RSM design. The I-optimal design wins by providing the lowest standard error throughout the interior, as indicated by the light shading. Modified distance-based selection comes close to I-optimal but comes up a bit short. I award it second place, but it would not bother me if a user who likes a better spread of design points made it their choice.
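For readers who want to see what the two criteria measure, here is a minimal Python sketch for a two-factor quadratic model. It assumes a plain square region in coded units rather than the constrained reactive-extrusion region, and all function names are hypothetical. The D criterion is taken as the determinant of X'X (larger is better), and the I criterion as the prediction variance x'(X'X)⁻¹x averaged over a grid (smaller is better, reported relative to the error variance).

```python
import itertools
import numpy as np

def quadratic_model_matrix(points):
    """Columns: intercept, x1, x2, x1*x2, x1^2, x2^2."""
    points = np.asarray(points, dtype=float)
    x1, x2 = points[:, 0], points[:, 1]
    return np.column_stack([np.ones(len(points)), x1, x2, x1 * x2, x1**2, x2**2])

def d_criterion(design):
    """Determinant of the information matrix X'X."""
    X = quadratic_model_matrix(design)
    return float(np.linalg.det(X.T @ X))

def i_criterion(design, grid):
    """Average relative prediction variance over the grid points."""
    X = quadratic_model_matrix(design)
    inv_info = np.linalg.inv(X.T @ X)
    F = quadratic_model_matrix(grid)
    return float(np.mean(np.sum((F @ inv_info) * F, axis=1)))

# Evaluation grid over the assumed square region [-1, 1] x [-1, 1].
axis = np.linspace(-1.0, 1.0, 21)
grid = np.array(list(itertools.product(axis, repeat=2)))

# Two hypothetical designs: a 3x3 layout, and the same with one more interior run.
three_by_three = np.array(list(itertools.product([-1.0, 0.0, 1.0], repeat=2)))
with_extra_center = np.vstack([three_by_three, [[0.0, 0.0]]])

for name, design in [("3x3", three_by_three), ("3x3 + center", with_extra_center)]:
    print(name, d_criterion(design), i_criterion(design, grid))
```

An optimal-design algorithm searches over run locations to push one of these numbers in the right direction, which is why the two criteria can favor different layouts.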

In conclusion, as I advised in my DOE FAQ Alert, to keep things simple, accept the Stat-Ease software custom-design defaults of I-optimality with 5 lack-of-fit points and 5 replicate points. If you need more precision, add extra model points. If the default design is too big, cut back to 3 lack-of-fit points and 3 replicate points. When in a desperate situation requiring an absolute minimum of runs, zero out the optional points and ignore the warning that Stat-Ease software pops up (a practice that I do not generally recommend!).

A practical tip for point selection

Look closely at the I-optimal design created by coordinate exchange in Figure 3 on the left and notice that two points are placed in nearly the same location (you may need a magnifying glass to see the offset!). To avoid nonsensical run specifications like this, I prefer to force the exchange algorithm to point exchange. This restricts design points to a geometrically registered candidate set, that is, the points cannot move freely to any location in the experimental region as allowed by coordinate exchange.
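The idea of restricting runs to a candidate set can be sketched with a naive point-exchange loop in Python. This is only an illustration under stated assumptions, not the algorithm Stat-Ease software uses: the candidate set is a hypothetical 5x5 grid on an unconstrained square, the criterion is average prediction variance for a quadratic model evaluated over the same grid, and the loop simply accepts any single-run swap that lowers the criterion.

```python
import itertools
import numpy as np

def quad_terms(points):
    """Columns: intercept, x1, x2, x1*x2, x1^2, x2^2."""
    x1, x2 = points[:, 0], points[:, 1]
    return np.column_stack([np.ones(len(points)), x1, x2, x1 * x2, x1**2, x2**2])

def avg_variance(design, region):
    """Average relative prediction variance; inf if the model is not estimable."""
    X = quad_terms(design)
    if np.linalg.matrix_rank(X) < X.shape[1]:
        return np.inf
    inv_info = np.linalg.inv(X.T @ X)
    F = quad_terms(region)
    return float(np.mean(np.sum((F @ inv_info) * F, axis=1)))

def point_exchange(candidates, n_runs, rng, max_passes=20):
    """Greedy swaps of design runs for candidate points (illustrative only)."""
    idx = rng.choice(len(candidates), size=n_runs, replace=False)
    while not np.isfinite(avg_variance(candidates[idx], candidates)):
        idx = rng.choice(len(candidates), size=n_runs, replace=False)
    start = best = avg_variance(candidates[idx], candidates)
    for _ in range(max_passes):
        improved = False
        for slot in range(n_runs):
            for c in range(len(candidates)):
                trial = idx.copy()
                trial[slot] = c            # runs can only land on candidate points
                score = avg_variance(candidates[trial], candidates)
                if score < best - 1e-12:
                    idx, best, improved = trial, score, True
        if not improved:
            break
    return candidates[idx], start, best

levels = np.linspace(-1.0, 1.0, 5)
candidates = np.array(list(itertools.product(levels, repeat=2)))
design, start, best = point_exchange(candidates, 10, np.random.default_rng(0))
print(f"criterion before: {start:.4f}, after: {best:.4f}")
print(design)
```

Because every run is copied from the candidate grid, the settings stay on tidy, repeatable locations. A coordinate-exchange search would instead adjust one coordinate at a time over a continuous range, which is how nearly coincident points can arise.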

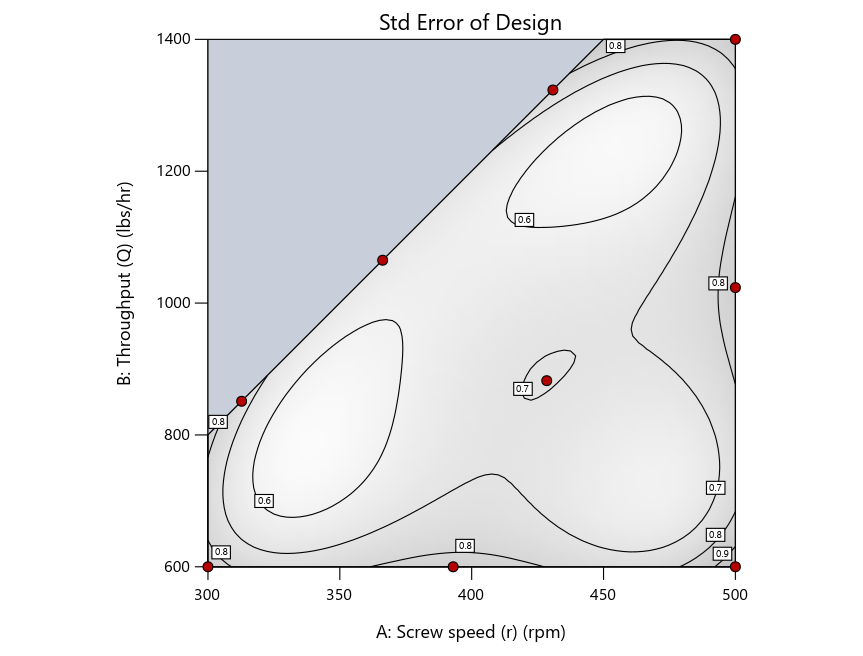

Figure 4 shows the location of runs for the reactive-extrusion experiment with point selection specified.

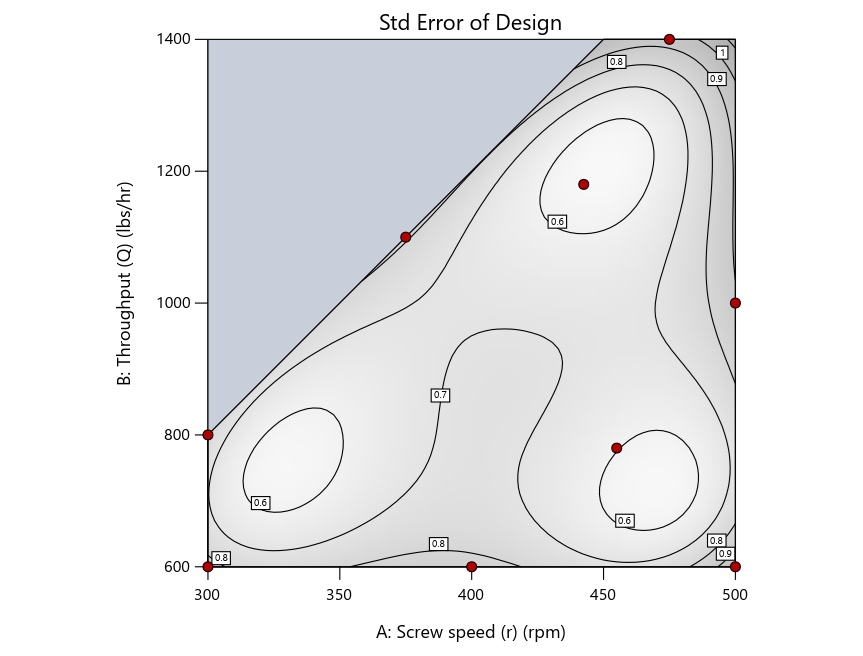

Figure 4: Designs built by I vs D vs modified distance by point exchange (left to right)

The D-optimal design remains a bad choice, the same as before. The edge for I-optimal over modified distance narrows because point exchange does not perform quite as well as coordinate exchange.

As an engineer with a wealth of experience doing process development, I like the point exchange because it:

- Reaches out for the ‘corners’—the vertices in the design space,

- Restricts runs to specific locations, and

- Allows users to see where they are by showing space point type on the design layout enabled via a right-click over the upper left corner.

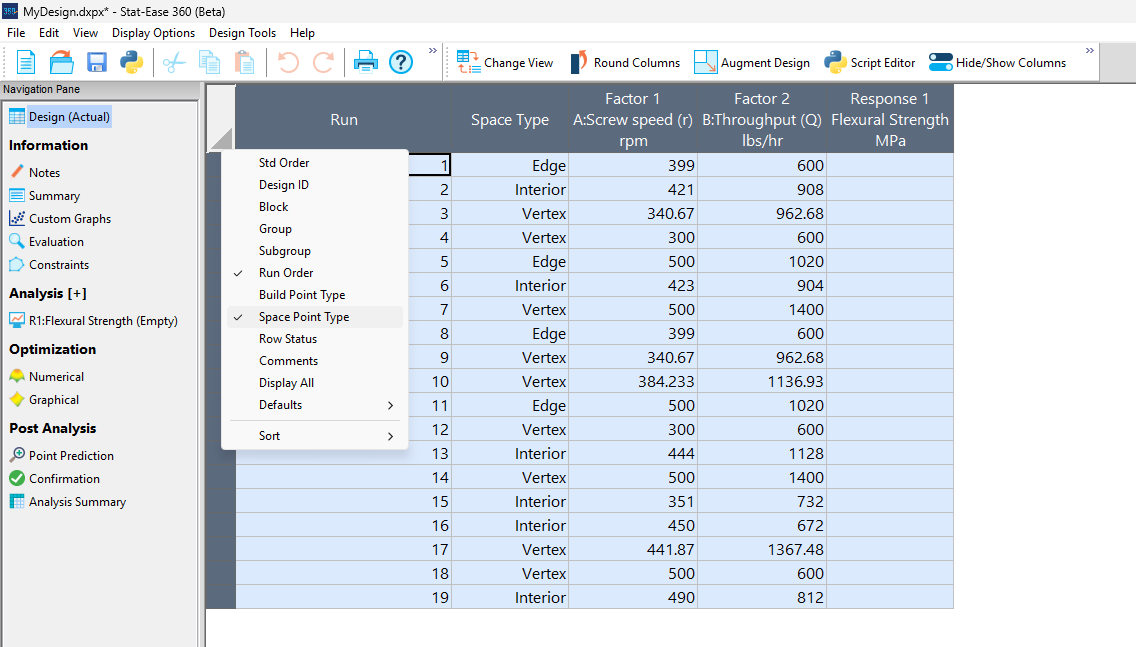

Figures 5a and 5b illustrate this advantage of point over coordinate exchange.

Figure 5a: Design built by coordinate exchange with Space Point Type toggled on

On the table displayed in Figure 5a for a design built by coordinate exchange, notice how points are identified as “Vertex” (good that the software recognized this!), “Edge” (not very specific) and “Interior” (only somewhat helpful).

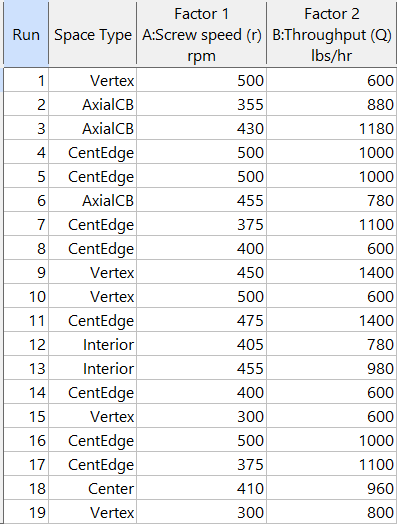

Figure 5b: Design built by point exchange with Space Point Type shown

As shown by Figure 5b, rebuilding the design via point exchange produces more meaningful identification of locations (and better registered geometrically): “Vertex” (a corner), “CentEdge” (center of edge—a good place to make a run), “Center” (another logical selection) and “Interior” (best bring up the contour graph via design evaluation to work out where these are located—click any point to identify them by run number).

Full disclosure: There is a downside to point exchange—as the number of factors increases beyond 12, the candidate set becomes excessive and thus the build takes more time than you may be willing to accept. Therefore, Stat-Ease software recommends going only with the far faster coordinate exchange. If you override this suggestion and persist with point exchange, no worries—during the build you can cancel it and switch to coordinate exchange.
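To get a feel for how quickly a candidate set can grow, consider a hypothetical candidate set made of a full grid with three levels per factor. This is an assumption for illustration; the candidate sets that Stat-Ease software builds may be constructed differently.

```python
def grid_candidate_count(n_factors, levels_per_factor=3):
    """Number of points in a full grid of candidate settings."""
    return levels_per_factor ** n_factors

for k in (2, 6, 12, 13):
    print(k, grid_candidate_count(k))
```

Under this assumption, each added factor triples the number of candidates that a point-exchange search has to consider for every run in the design.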

Final words

A fellow chemical engineer often chastised me by saying “Mark, you are overthinking things again.” Sorry about that. If you prefer to keep things simple (and keep statisticians happy!), go with the Stat-Ease software defaults for optimal designs. Allow it to run both exchanges and choose the most optimal one, even though this will likely be the coordinate exchange. Then use the handy Round Columns tool (seen atop Figure 5a) to reduce the number of decimal places on impossibly precise settings.
